CS 3410: Computer System Organization and Programming

Welcome to CS 3410 for fall 2024! Here are some useful links:

- The course schedule for lecture topics and all deadlines

- Please actually read the syllabus

- A schedule of office hours

- Ed, for Q&A

- Gradescope, where assignments and exams happen

- Canvas, which we are mostly not using but which will have some attendance tracking information

- Prof. Sampson’s lecture doodles

- Prof. Guidi’s slides

Syllabus

CS 3410, “Computer System Organization and Programming,” is your chance to learn how computers really work. You already have plenty of experience programming them at a high level, but how does your code in Java or Python translate into the actual operation of a chunk of silicon? We’ll cover systems programming in C, assembly programming in RISC-V, the architecture of microprocessors, the way programs interact with operating systems, and how to correctly and efficiently harness the power of parallelism.

In fall 2024, CS 3410 has a different structure! Compared to recent offerings of 3410, we’ll focus more on the software view of hardware and less on the digital logic foundations of machine architecture. There are also a larger number of small assignments instead of a few big projects. We appreciate your patience while we build this new approach to teaching computer systems!

TL;DR

- All course communication will happen on Ed.

- Log in with your netid@cornell.edu email address. You should already have access.

- You’re responsible for knowing everything that we post as announcements there. Ignore announcements at your own risk.

- Homework hand-in and grading happens on Gradescope.

- There are 11 assignments.

- The deadline is usually Wednesday night at 11:59pm. See the schedule for details.

- You have 12 total “slip days” you can use throughout the semester. You may use up to 3 for a given assignment.

- Your lowest homework score will be dropped.

- There is one prelim and a final exam.

Organization

Announcements and Q&A: Ed

We will be using Ed for all announcements and communication about the course. Log in there with your netid@cornell.edu email address. The course staff will post important updates there that you really want to know about! Check often, and don’t miss the announcement emails.

You can also ask questions—about lectures, homework, or anything else—on Ed.

What to post. If you can answer someone else’s question yourself, please do! But be careful not to post solutions. If you’re not sure whether something is OK to post, contact the course staff privately. You can do that by marking your question as “Private” when you post it.

How to ask a good question. A good post asks a specific question. Here are some examples of bad posts:

- “Tell me more about broad topic X.”

- “Does anyone have any hints for problem Y?”

If you need help with a homework problem, for example, be sure to include what you’ve tried already, exactly where you’re stuck, and what you’re currently thinking about how to proceed. If you just ask for help without any evidence of effort, we’ll punt the question back to you for more details.

Never post screenshots of code. They are inaccessible, hard to copy and paste, and hard to read on small screens (i.e., phones). Use Ed’s “code block” feature and paste the actual code.

Use Ed, not email. Do not contact individual TAs or the instructors via email. Use private Ed posts instead. The only exception is for sensitive topics that need to be kept confidential; please email cs3410-prof@cornell.edu (not the instructors’ personal addresses) with those.

Assignments: Gradescope

You will submit your solutions to assignments and receive grades through Gradescope.

We try to grade anonymously, i.e., the course staff won’t know who we’re grading. So please do not put your name or NetID anywhere in the files you upload to Gradescope. (Gradescope knows who you are!)

Content

Grading

Final grades will be assigned with these proportions:

- Assignments: 30%

- Preliminary Exam: 25%

- Final Exam: 25%

- Topic Mastery Quizzes: 10%

- Participation: 10%

Assignments

Problem sets are usually due on Wednesday at 11:59 PM. See the course schedule. All assignments are individual. You’ll turn in assignments via Gradescope.

Slip days. You have a total of 12 slip days to use throughout the semester, of which you can use at most 3 for a given assignment. A “slip day” is a 24-hour penalty-free extension on an assignment deadline that you can use without even asking for permission. Use slip days to make your life easier when dealing with:

- routine illness

- minor injury

- travel

- job fairs

- job interviews

- large workloads in other courses

- extra-curriculars

- just getting overwhelmed

We trust you to use your slip days wisely. They often mean you have less time to work on the next assignment.

Dropped score. We will drop one score to calculate your final grade: that is, your lowest-scoring problem set won’t count, even if that score is zero. Use this policy to cope with extenuating circumstances, or that especially difficult week in your semester, by skipping one assignment.

Other lateness. Late submissions (beyond slip days) will not be accepted. In truly exceptional circumstances where slip days do not cut it, contact the instructors. Exceptional circumstances require some accompanying documentation.

Grade cap. In terms of your final course grade, assignment scores are capped at 85%. All scores above 85% will count as “full credit” and an A average; scores below 85% will be scaled accordingly (e.g., 80% on an assignment maps to a final-grade value of 94.1%). This policy is meant to help you focus holistically on learning what each assignment is trying to teach you, not on maximizing individual points.
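To make the arithmetic concrete, here is a minimal sketch of the scaling described above. It assumes the scaled value is simply the raw percentage divided by 85 and clamped at 100. The function name scaled_score is hypothetical, and the course's actual grade computation may differ in rounding or other details.

```shell
# Hypothetical helper (not part of the course tooling): map a raw assignment
# percentage to its final-grade value under an 85% cap.
scaled_score() {
    awk -v raw="$1" 'BEGIN {
        scaled = raw / 85 * 100          # a raw 85 counts as 100
        if (scaled > 100) scaled = 100   # anything above the cap is full credit
        printf "%.1f\n", scaled
    }'
}

scaled_score 80   # prints 94.1, matching the example above
scaled_score 92   # prints 100.0
```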

Exams

There is one preliminary exam and a final exam. See the course schedule.

Makeup exams must be scheduled within the first three weeks of class. Check the schedule now to see if you have a conflict with another class and use a private Ed post to reschedule.

Topic Mastery Quizzes

Weekly topic mastery quizzes will help reinforce the lessons from a given week’s lectures. We’ll release the quiz on Sunday. The material will be covered in lectures that week. The quiz due date is the following Friday. These quizzes are also on Gradescope.

Because they’re meant to help you practice, grading on these quizzes is very forgiving:

- You are welcome to retake the quiz as many times as you like. We’ll keep your best attempt.

- The score is capped at 90%, so scoring 9/10 is “full credit” and counts the same as scoring 10/10.

- We will drop your two lowest scores.

No extensions are available on these quizzes.

Labs

CS 3410 has lab sections that are designed to help you get started on assignments. Each assignment has a first step that you should be able to complete in lab, with lots of help from the TA. There is nothing separate to turn in for each lab—you’ll turn in your work as part of the associated assignment.

Attendance is required. You must attend the lab section you are registered for. (If you need to change lab sections, please use the “swap” feature on Student Center to avoid losing your spot in the main course registration.) You are responsible for making sure that your attendance is recorded each time.

Participation

The “participation” segment of your grade has three main components:

- 4% for lecture attendance, as measured by occasional Poll Everywhere polls.

- 4% for lab attendance, as recorded by the lab’s instructors.

- 2% for surveys:

- The introduction survey (on Gradescope) in the first week of class.

- The mid-semester feedback survey.

- The semester-end course evaluation.

We know that life happens, so you can miss up to 3 lab sections and 5 lectures without penalty.

Policies

Academic Integrity

Absolute integrity is expected of all Cornell students in every academic undertaking. The course staff will prosecute violations aggressively.

You are responsible for understanding these policies:

- Cornell University Code of Academic Integrity

- Computer Science Department Code of Academic Integrity

On assignments, everything you turn in must be 100% completely your own work. You may discuss the work in generalities with other students using natural language, but you may not show anyone else your code or look at anyone else’s code. Specifically:

- Do not show any (partial or complete) solution to another student.

- Do not look at any (partial or complete) solution written by another student.

- Do not search the Internet for solutions, on Google or Stack Overflow or anywhere else.

- Do not post solutions on Ed, except in private threads with course staff.

- Do ask someone if you’re confused about what the assignment is asking for.

- Definitely ask the course staff if you’re not sure whether or not something is OK.

Here’s the policy for exams: you may not give assistance to anyone or receive assistance of any kind from anyone at all (outside of the course staff). All exams are closed book.

This course is participating in Accepting Responsibility (AR), which is a pilot supplement to the Cornell Code of Academic Integrity (AI). For details about the AR process and how it supplements the AI Code, see the AR website.

Generative AI

You may not use generative AI tools (e.g., ChatGPT, Gemini, or Copilot) to generate any code or text that you turn in. All work you submit must be 100% hand-written.

Respect in Class

Everyone—the instructors, TAs, and students—must be respectful of everyone else in this class. All communication, in class and online, will be held to a high standard for inclusiveness: it may never target individuals or groups for harassment, and it may not exclude specific groups. That includes everything from outright animosity to the subtle ways we phrase things and even our timing.

For example: do not talk over other people; don’t use male pronouns when you mean to refer to people of all genders; avoid explicit language that has a chance of seeming inappropriate to other people; and don’t let strong emotions get in the way of calm, scientific communication.

If any of the communication in this class doesn’t meet these standards, please don’t escalate it by responding in kind. Instead, contact the instructors as early as possible. If you don’t feel comfortable discussing something directly with the instructors—for example, if the instructor is the problem—please contact the CS advising office or the department chair.

Special Needs and Wellness

We provide accommodations for disabilities. Students with disabilities can contact Student Disability Services for a confidential discussion of their individual needs.

If you experience personal or academic stress or need to talk to someone who can help, contact the instructors or:

- Engineering academic advising

- Arts & Sciences academic advising

- Learning Strategies Center

- Let’s Talk Drop-in Counseling at Cornell Health

- Empathy Assistance and Referral Service (EARS)

Please also explore other mental health resources available at Cornell.

Fall 2024 Course Schedule

Overview

| Week | Monday | Wednesday | Assignment | Lab |

|---|---|---|---|---|

| Aug 26 | 1+1=2 | Intro, C | printf | C Intro |

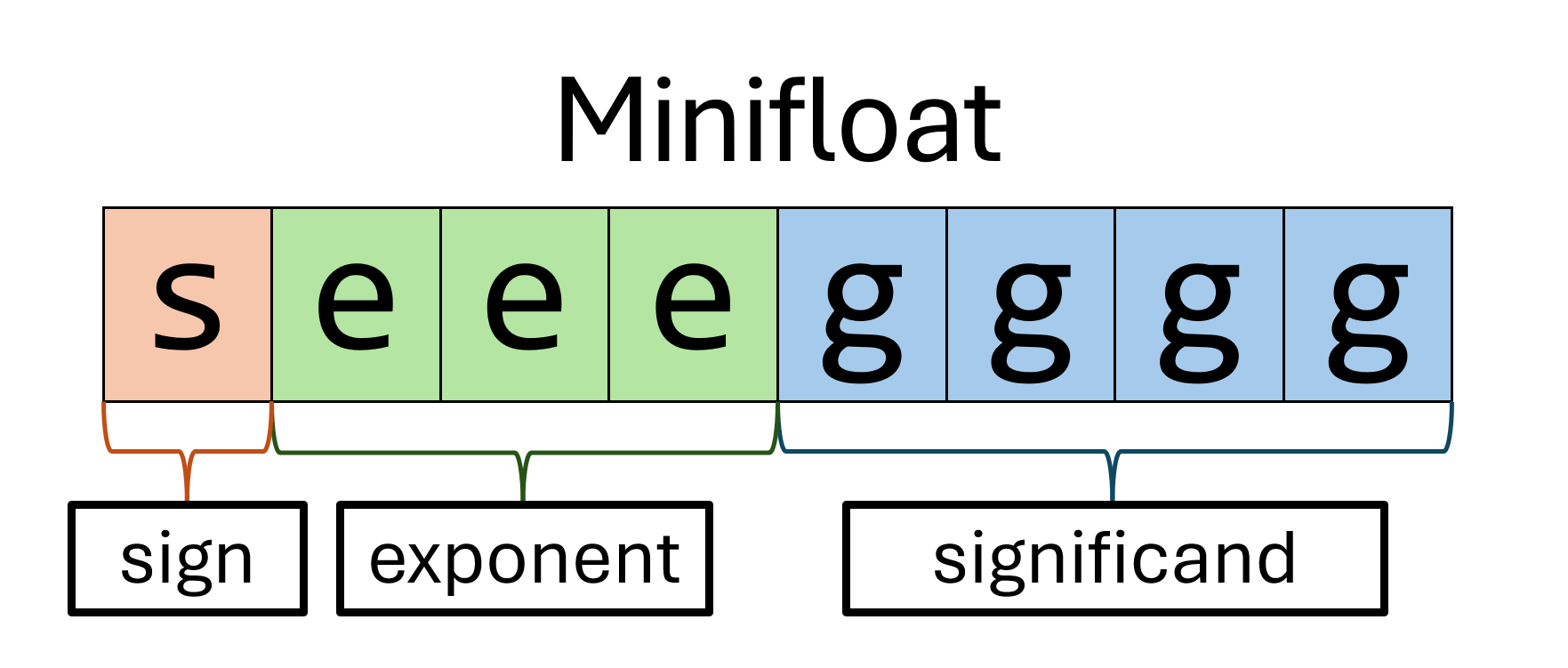

| Sep 2 | Labor Day | Float, Types | Minifloat | Float Practice |

| Sep 9 | Arrays & Pointers | Heap & Allocation | Huffman | Priority Queue |

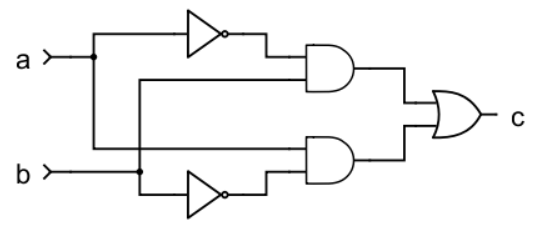

| Sep 16 | Gates, Logic | State | Circuits | Logic Intro |

| Sep 23 | FemtoProc, CPU | ISAs, RISC-V | CPU Sim | Processors |

| Sep 30 | More RISC-V | Control Flow | Assembly | Assembly & Review |

| Oct 7 | Calling Conv. | Calling Conv. | Prelim | Assembly |

| Oct 14 | Fall Break | Caches | Functions | RISC-V Practice |

| Oct 21 | Caches | Caches | Blocking | Cache Intro |

| Oct 28 | Processes | System Calls | Shell | System Calls |

| Nov 4 | Virtual Memory | Threads | Optional | |

| Nov 11 | Atomics | Synchronization | Concurrent | LR/SC Practice |

| Nov 18 | Parallelism | Parallelism | Raycasting | Threads |

| Nov 25 | Parallelism | Thanksgiving | No Lab | |

| Dec 2 | Parallelism | Parallelism | Review | |

| Dec 9 | GPUs? |

Assignments

Assignments are usually due on Wednesday at 11:59pm. The table above shows the week when the assignment is assigned; it’s due during the next week (with some exceptions; see the bold dates). So the deadlines are:

- printf: Sep 4

- Minifloat: Sep 11

- Huffman Compression: Sep 18

- Generating Circuits: Sep 25

- CPU Simulation: Oct 2

- Assembly Programming: Oct 11 (before Fall Break)

- Functions in Assembly: Oct 23

- Cache Blocking: Oct 30

- Shell: Nov 13

- Concurrent Hash Table: Nov 20

- Parallel Raycasting: Dec 9 (last day of class)

Lab Sections

Lab sections are mostly on Thursday. One is on Wednesday and one is on Friday. The work in each lab is meant to help you get started on the assignment that is out that week; the Lab column above indicates the part of the assignment we’ll do together in section. There is nothing separate to turn in from lab; the work you do in lab will get turned in as part of that week’s assignment.

Exams

There are two exams:

- One preliminary exam, on October 8 at 7:30pm

- The final exam, on December 13 at 7pm

Office Hours

We look forward to seeing you in office hours! Check out the schedule of available office hours in this Google Calendar, which is also embedded below.

With TAs

TA office hours are either:

- In person, in Rhodes 529.

- On Zoom, using links associated with the events in the calendar.

In-person office hours use a simple whiteboard queueing mechanism; Zoom office hours use Queue Me In.

Office hours do not happen on official Cornell days off and breaks. (We will attempt to make the calendar reflect this fact, but please trust this statement over the calendar.)

With the Instructors

Instructor office hours are appropriate for discussing technical content and course logistics. They are less appropriate for getting help with a specific assignment; please see TAs for that.

- To book a session with Prof. Sampson, please find a time on this schedule.

- To book a session with Prof. Guidi, please find a time on this schedule.

Using the CS 3410 Infrastructure

The coursework for CS 3410 mainly consists of writing and testing programs in C and RISC-V assembly. You will need to use the course’s provided infrastructure to compile and run these programs.

Course Setup Video

We have provided a video tutorial detailing how to get started with the course infrastructure. Feel free to read the instructions below instead—they are identical to what the video describes.

Setting Up with Docker

This semester, you will use a Docker container that comes with all of the infrastructure you will need to run your programs.

The first step is to install Docker. Docker has instructions for installing it on Windows, macOS, and on various Linux distributions. Follow the instructions on those pages to get Docker up and running.

For Windows users: to type the commands in these pages, you can choose to use either the Windows Subsystem for Linux (WSL) or PowerShell. PowerShell comes built in, but you have to install WSL yourself. On the other hand, WSL lets your computer emulate a Unix environment, so you can use more commands as written. If you don’t have a preference, we recommend WSL.

Check your installation by opening your terminal and entering:

docker --version

Now, you’ll want to download the container we’ve set up. Enter this command:

docker pull ghcr.io/sampsyo/cs3410-infra

If you get an error like this: “Cannot connect to the Docker daemon at [path]. Is the docker daemon running?”, you need to ensure that the Docker desktop application is actively running on your machine. Start the application and leave it running in the background before proceeding.

This command will take a while. When it’s done, let’s make sure it works! First, create the world’s tiniest C program by copying and pasting this command into your terminal:

printf '#include <stdio.h>\nint main() { printf("hi!\\n"); }\n' > hi.c

(Or, you can just use a text editor and write a little C program yourself.)

Now, here are two commands that use the Docker container to compile and run your program.

docker run -i --rm -v ${PWD}:/root ghcr.io/sampsyo/cs3410-infra gcc hi.c

docker run -i --rm -v ${PWD}:/root ghcr.io/sampsyo/cs3410-infra qemu a.out

If your terminal prints “hi!” then you’re good to go!

You won’t need to learn Docker to do your work in this course. But to explain what’s going on here:

- docker run [OPTIONS] ghcr.io/sampsyo/cs3410-infra [COMMAND] tells Docker to run a given command in the CS 3410 infrastructure container.

- Docker’s -i option makes sure that the command is interactive, in case you need to interact with whatever’s going on inside the container, and --rm tells it not to keep around an “image” of the container after the command finishes (which we definitely don’t need).

- -v ${PWD}:/root uses a Docker volume to give the container access to your files, like hi.c.

After all that, the important part is the actual command we’re running.

gcc hi.c compiles the C program (using GCC) to a RISC-V executable called a.out.

Then, qemu a.out runs that program (using QEMU).

Make an rv Alias

The Docker command above is a lot to type every time! To make this easier, we can use a shell alias.

On macOS, Linux, and WSL

Try copying and pasting this command:

alias rv='docker run -i --rm -v "$PWD":/root ghcr.io/sampsyo/cs3410-infra'

Now you can use much shorter commands to compile and run code.

Just put rv before the command you want to run, like this:

rv gcc hi.c

rv qemu a.out

Unfortunately, this alias will only last for your current terminal session.

To make it stick around when you open a new terminal window, you will need to add the alias rv=... command to your shell’s configuration file.

First type this command to find out which shell you’re using:

echo $SHELL

It’s probably bash or zsh, in which case you need to edit .bashrc or .zshrc in your home directory.

Here is a command you can copy and paste, but fill in the appropriate file according to your shell:

echo "alias rv='docker run -i --rm -v \"\$PWD\":/root ghcr.io/sampsyo/cs3410-infra'" >> ~/.bashrc

Change that ~/.bashrc at the end to ~/.zshrc if your shell is zsh.
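One caveat with the echo command above: running it twice appends the alias twice. As a minimal sketch, the hypothetical helper below (the name add_rv_alias is ours, not part of the course infrastructure) checks whether the file already contains an rv alias before appending. It assumes a POSIX-style shell such as bash or zsh.

```shell
# Hypothetical helper: append the rv alias to a shell config file only if
# that file does not already define it.
add_rv_alias() {
    rc_file="$1"
    alias_line="alias rv='docker run -i --rm -v \"\$PWD\":/root ghcr.io/sampsyo/cs3410-infra'"
    if grep -qF "alias rv=" "$rc_file" 2>/dev/null; then
        echo "rv alias already present in $rc_file"
    else
        printf '%s\n' "$alias_line" >> "$rc_file"
        echo "added rv alias to $rc_file"
    fi
}

# Example (uncomment and pick the file that matches your shell):
# add_rv_alias ~/.bashrc
```

Open a new terminal window afterward (or re-read the configuration file) for the alias to take effect.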

On Windows with PowerShell (Not WSL)

(Remember, if you’re using WSL on Windows, please use the previous section.)

In PowerShell, we will create a shell function instead of an alias.

We assume that you have created a cs3410 directory on your computer where you’ll be storing all your code files.

First, open Windows PowerShell ISE (not the plain PowerShell) by typing it into the Windows search bar.

There will be an editor component at the top, right under Untitled1.ps1.

There, paste the following:

Function rv_d { docker run -i --rm -v ${PWD}:/root ghcr.io/sampsyo/cs3410-infra $args[0] $args[1] }

This will create a function called rv_d that takes two arguments (we’ll see what those are in a bit). We’re naming it rv_d and not just rv (as done in the previous section) because PowerShell already has a definition for rv. The “d” stands for Docker.

Then, in the top left corner, click “File → Save As” and name your creation. Here, we’ll use function_rv_d. Finally, navigate to the cs3410 folder that stores all your work and once you’re there, hit “Save.”

Assuming you don’t delete it, that file will forever be there. This is how we put it to work:

Every time you’d like to run those long docker commands, open PowerShell (the plain one, not the ISE) and navigate to your cs3410 folder. Then, enter the following command:

. .\function_rv_d.ps1

This will run the code in that script file, therefore defining the rv_d function in your current PowerShell session. Then, navigate to wherever the .c file you’re working on is located (we assume it’s called file.c) and to compile it, simply type rv_d gcc file.c. Finally, to run the compiled code, enter rv_d qemu a.out. Try it out with your hi.c file.

Debugging C Code

GDB is an incredibly useful tool for debugging C code. It allows you to see where errors happen and step through your code one line at a time, with the ability to see values of variables along the way. Learning how to use GDB effectively will be very important to you in this course.

Entering GDB Commandline Mode

First, make sure to compile your source files with the -g flag. This flag adds debugging symbols to the executable, which lets GDB debug much more effectively. For example:

rv gcc -g -Wall -Wextra -Wpedantic -Wshadow -Wformat=2 -std=c17 hi.c

In order to use gdb in the 3410 container, you need to open two terminals: one for running qemu in debug mode in the background, and the other for invoking gdb and interacting with it.

1. First, open a new terminal, and type the following commands:

   1. docker run -i --rm -v `pwd`:/root --name cs3410 ghcr.io/sampsyo/cs3410-infra:latest. Feel free to change the “name” from cs3410 to any name you prefer.

   2. rv gcc -g -Wall ... (more flags) EXECUTABLE SOURCE.c. Once you have entered the container, compile your source file with the -g flag and any other recommended flags.

   3. qemu -g 1234 EXECUTABLE ARG1 ... (more arguments). Now you can start executing qemu in debug mode and invoke the executable file EXECUTABLE with any arguments you need to pass in.

2. Then, open another terminal, and type the following commands:

   1. docker exec -i cs3410 /bin/bash, where cs3410 is the placeholder for the name of the container you are running in the background via the first terminal.

   2. gdb --args EXECUTABLE ARG1 ... (more arguments) to start GDB.

   3. target remote localhost:1234. Execute this inside GDB. It instructs GDB to perform remote debugging by connecting to the specified port.

   4. Start debugging!

3. Once you quit a GDB session, you need to go back to the first terminal to spin up qemu again (Step 1.3) and then invoke GDB again (Step 2.2 and onwards).

Checking for Common C Errors

Here are some important limitations of the PowerShell rv_d function:

- You’ll have to run the function_rv_d.ps1 script file every time you open a new PowerShell session.

- This function assumes you’ll only be using it to execute rv_d gcc file.c and rv_d qemu a.out (where file.c and a.out are the .c file and corresponding executable in question). For anything else, this rv_d function doesn’t work. For those, you’d have to type in the entire Docker command and then whatever else after. Another incentive to go the WSL route.

Set Up Visual Studio Code

You can use any text editor you like in CS 3410. If you don’t know what to pick, many students like Visual Studio Code, which is affectionately known as VSCode.

It’s completely optional, but you might want to use VSCode’s code completion and diagnostics. Here are some suggestions:

- Install VSCode’s C/C++ extension. There is a guide to installing it in the docs.

- Configure VSCode to use the container. Put the contents of this file in .devcontainer/devcontainer.json inside the directory where you’re doing your work for a given assignment.

- Tell VSCode to use the RISC-V setup. Put the contents of this file in .vscode/c_cpp_properties.json in your work directory.

Tools

This section contains some tutorial-level overviews of some tools you will use in CS 3410: SSH for remote login, the Unix shell and navigating the command line, and git for version control.

Unix Shell Tutorial

This is a modified version of Tutorials 1 and 2 of a Unix tutorial from the University of Surrey.

Listing Files and Directories

When you first open a terminal window, your current working directory is your home directory. To find out what files are in your home directory, type:

$ ls

(As with all examples in these pages, the $ is not part of the command.

It is meant to evoke the shell’s prompt, and you should type only the characters that come after it.)

There may be no files visible in your home directory, in which case you’ll just see another prompt.

By default, ls will skip some hidden files.

Hidden files are not special: they just have filenames that begin with a . character.

Hidden files usually contain configurations or other files meant to be read by programs instead of directly by humans.

To see everything, including the hidden files, use:

$ ls -a

ls is an example of a command which can take options, a.k.a. flags.

-a is an example of an option. The options change the behavior of the command. There are online manual pages that tell you which options a particular command can take, and how each option modifies the behavior of the command. (See later in this tutorial.)
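Here is a small, self-contained sketch of the difference between ls and ls -a. It works in a throwaway directory created with mktemp so it does not touch your home directory; the file names notes.txt and .hidden_config are made up for illustration.

```shell
# Create a scratch directory with one ordinary file and one hidden file.
demo_dir=$(mktemp -d)
touch "$demo_dir/notes.txt" "$demo_dir/.hidden_config"

plain_listing=$(ls "$demo_dir")      # skips names that begin with a dot
full_listing=$(ls -a "$demo_dir")    # also shows ., .., and .hidden_config

echo "ls shows:"
echo "$plain_listing"
echo "ls -a shows:"
echo "$full_listing"

rm -r "$demo_dir"                    # clean up the scratch directory
```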

Making Directories

We will now make a subdirectory in your home directory to hold the files you will be creating and using in the course of this tutorial. To make a subdirectory called “unixstuff” in your current working directory type:

$ mkdir unixstuff

To see the directory you have just created, type:

$ ls

Changing Directories

The command cd [directory] changes the current working directory to [directory].

The current working directory may be thought of as the directory you are in, i.e., your current position in the file-system tree.

To change to the directory you have just made, type:

$ cd unixstuff

Type ls to see the contents (which should be empty).

Exercise.

Make another directory inside unixstuff called backups.

The directories . and ..

Still in the unixstuff directory, type

$ ls -a

As you can see, in the unixstuff directory (and in all other directories), there are two special directories called . and .. (a single dot and a double dot).

In UNIX, . means the current directory, so typing:

$ cd .

(with a space between cd and .) means stay where you are (the unixstuff directory). This may not seem very useful at first, but using . as the name of the current directory will save a lot of typing, as we shall see later in the tutorial.

In UNIX, .. means the parent directory. So typing:

$ cd ..

will take you one directory up the hierarchy (back to your home directory). Try it now!

Typing cd with no argument always returns you to your home directory. This is very useful if you are lost in the file system.
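The behavior of . and .. can be checked with a short sketch like the one below. It uses a scratch directory from mktemp as a stand-in for your home directory, so the exact paths are assumptions; only the directory names unixstuff and backups come from this tutorial.

```shell
start=$(pwd)                          # remember where we began
base=$(mktemp -d)                     # scratch stand-in for a home directory
mkdir -p "$base/unixstuff/backups"

cd "$base/unixstuff"
cd .                                  # . is the current directory: no movement
after_dot=$(basename "$(pwd)")

cd backups
cd ..                                 # .. is the parent: back up to unixstuff
after_dotdot=$(basename "$(pwd)")

echo "after 'cd .':  $after_dot"
echo "after 'cd ..': $after_dotdot"

cd "$start"                           # return to the starting directory
rm -r "$base"                         # clean up
```

Both lines should report unixstuff: the first because cd . stays put, the second because cd .. climbs out of backups.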

Pathnames

Pathnames enable you to work out where you are in relation to the whole file-system. For example, to find out the absolute pathname of your home-directory, type cd to get back to your home-directory and then type:

$ pwd

pwd means “print working directory”. The full pathname will look something like this:

/home/youruser

which means that youruser (your home directory) is in a directory called home, which is in the “root” top-level directory, called /. If you cd into unixstuff first, pwd prints /home/youruser/unixstuff instead, because unixstuff is inside youruser.

Exercise.

Use the commands ls, cd, and pwd to explore the file system.

Understanding Pathnames

First, type cd to get back to your home-directory, then type

$ ls unixstuff

to list the contents of your unixstuff directory.

Now type

$ ls backups

You will get a message like this:

backups: No such file or directory

The reason is, backups is not in your current working directory. To use a command on a file (or directory) not in the current working directory (the directory you are currently in), you must either cd to the correct directory, or specify its full pathname. To list the contents of your backups directory, you must type

$ ls unixstuff/backups

You can refer to your home directory with the tilde ~ character. It can be used to specify paths starting at your home directory. So typing

$ ls ~/unixstuff

will list the contents of your unixstuff directory, no matter where you currently are in the file system.
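As a rough illustration of why ls backups fails while ls unixstuff/backups succeeds, the sketch below rebuilds the same layout inside a scratch directory. The file example.txt is a made-up placeholder so that the listing has something to show.

```shell
start=$(pwd)
base=$(mktemp -d)                               # scratch stand-in for home
mkdir -p "$base/unixstuff/backups"
touch "$base/unixstuff/backups/example.txt"     # hypothetical file

cd "$base"
# backups is not directly inside the current directory, so this fails.
if ls backups >/dev/null 2>&1; then
    relative_result="found"
else
    relative_result="not found"
fi
# Spelling out the path through unixstuff works.
nested_listing=$(ls unixstuff/backups)

echo "ls backups:           $relative_result"
echo "ls unixstuff/backups: $nested_listing"

cd "$start"
rm -r "$base"
```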

Summary

| Command | Meaning |
|---|---|
| ls | list files and directories |
| ls -a | list all files and directories |
| mkdir | make a directory |
| cd directory | change to named directory |
| cd | change to home directory |
| cd ~ | change to home directory |
| cd .. | change to parent directory |
| pwd | display the path of the current directory |

Copying Files

cp [file1] [file2] makes a copy of file1 in the current working directory and calls it file2.

We will now download a file from the Web so we can copy it around.

First, cd to your unixstuff directory:

$ cd ~/unixstuff

Then, type:

$ curl -O https://www.cs.cornell.edu/robots.txt

The curl command puts this text file into a new file called robots.txt.

Now type cp robots.txt robots.bak to create a copy.

Moving Files

mv [file1] [file2] moves (or renames) file1 to file2.

To move a file from one place to another, use the mv command. This has the effect of moving rather than copying the file, so you end up with only one file rather than two. It can also be used to rename a file, by moving the file to the same directory, but giving it a different name.

We are now going to move the file robots.bak to your backups directory.

First, change directories to your unixstuff directory (can you remember how?). Then, inside the unixstuff directory, type:

$ mv robots.bak backups/robots.bak

Type ls and ls backups to see if it has worked.

Removing files and directories

To delete (remove) a file, use the rm command. As an example, we are going to create a copy of the robots.txt file then delete it.

Inside your unixstuff directory, type:

$ cp robots.txt tempfile.txt

$ ls

$ rm tempfile.txt

$ ls

You can use the rmdir command to remove a directory (make sure it is empty first). Try to remove the backups directory. You will not be able to since UNIX will not let you remove a non-empty directory.
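The copy, move, and remove steps above can be rehearsed safely in a scratch directory. This sketch writes a two-line stand-in for robots.txt instead of downloading the real file, so its contents are an assumption; the commands themselves are the ones from this tutorial.

```shell
start=$(pwd)
work=$(mktemp -d)                     # scratch stand-in for unixstuff
cd "$work"
mkdir backups
printf 'User-agent: *\nDisallow: /private/\n' > robots.txt   # stand-in file

cp robots.txt robots.bak              # now there are two files
mv robots.bak backups/robots.bak      # moved, so only one copy of the .bak

# rmdir refuses to remove a directory that still has files in it.
if rmdir backups 2>/dev/null; then
    rmdir_result="removed"
else
    rmdir_result="refused"
fi

rm backups/robots.bak                 # empty the directory...
rmdir backups                         # ...and now rmdir succeeds
remaining=$(ls)

echo "rmdir on non-empty directory: $rmdir_result"
echo "left in scratch directory:    $remaining"

cd "$start"
rm -r "$work"
```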

Exercise.

Create a directory called tempstuff using mkdir, then remove it using the rmdir command.

Displaying the contents of a file on the screen

Before you start the next section, you may like to clear the terminal window of the previous commands so the output of the following commands can be clearly understood. At the prompt, type:

$ clear

This will clear all text and leave you with the $ prompt at the top of the window.

The command cat can be used to display the contents of a file on the screen. Type:

$ cat robots.txt

As you can see, the file is longer than the size of the window, so it scrolls past, making it unreadable.

The command less writes the contents of a file onto the screen a page at a time. Type:

$ less robots.txt

Press the [space-bar] if you want to see another page, and type [q] if you want to quit reading.

The head command writes the first ten lines of a file to the screen.

First clear the screen, then type:

$ head robots.txt

Then type:

$ head -5 robots.txt

What difference did the -5 make to the head command?

The tail command writes the last ten lines of a file to the screen. Clear the screen and type:

$ tail robots.txt

Exercise. How can you view the last 15 lines of the file?
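If you want to experiment with head and tail without depending on the contents of robots.txt, a numbered file makes the effect easy to see. The sketch below is one way to do that; it assumes the seq utility is available (it is on most Linux and macOS systems) and uses head -n 5, which is the longer spelling of head -5.

```shell
sample=$(mktemp)
seq 1 30 > "$sample"                  # a file whose lines are the numbers 1..30

# Count how many lines each command prints (tr strips padding from wc).
head_default=$(head "$sample" | wc -l | tr -d ' ')
head_five=$(head -n 5 "$sample" | wc -l | tr -d ' ')
# The first line that tail prints tells us where the "last ten" begin.
tail_first=$(tail "$sample" | head -n 1)

echo "head prints $head_default lines"
echo "head -n 5 prints $head_five lines"
echo "tail starts at line $tail_first"

rm "$sample"
```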

Searching the Contents of a File

Using less, you can search through a text file for a keyword (pattern). For example, to search through robots.txt for the word “jpeg”, type

$ less robots.txt

then, still in less, type a forward slash [/] followed by the word to search

/jpeg

As you can see, less finds and highlights the keyword. Type [n] to search for the next occurrence of the word.

grep is one of many standard UNIX utilities. It searches files for specified words or patterns. First clear the screen, then type:

$ grep jpeg robots.txt

As you can see, grep has printed out each line containing the word “jpeg”.

To search for a phrase or pattern, you must enclose it in single quotes (the apostrophe symbol). For example, to search for the phrase web crawlers, type

$ grep 'web crawlers' robots.txt

Some of the other options of grep are:

- -v: display those lines that do NOT match

- -n: precede each matching line with the line number

- -c: print only the total count of matched lines
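To see these grep options side by side, here is a minimal sketch that runs them against a three-line file. The file contents are invented for illustration and are not the real robots.txt.

```shell
sample=$(mktemp)
printf '%s\n' \
    'Disallow: /images/photo.jpeg' \
    'Allow: /index.html' \
    'Disallow: /images/logo.jpeg' > "$sample"

matching=$(grep jpeg "$sample")          # lines that contain jpeg
non_matching=$(grep -v jpeg "$sample")   # lines that do NOT contain jpeg
numbered=$(grep -n jpeg "$sample")       # matches prefixed with line numbers
count=$(grep -c jpeg "$sample")          # how many lines matched

echo "grep -v: $non_matching"
echo "grep -n:"
echo "$numbered"
echo "grep -c: $count"

rm "$sample"
```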

Summary

| Command | Meaning |
|---|---|
| cp file1 file2 | copy file1 and call it file2 |
| mv file1 file2 | move or rename file1 to file2 |
| rm file | remove a file |
| rmdir directory | remove a directory |
| cat file | display a file |
| less file | display a file a page at a time |
| head file | display the first few lines of a file |
| tail file | display the last few lines of a file |
| grep 'keyword' file | search a file for keywords |

Don’t stop here! We highly recommend completing the online UNIX tutorial, beginning with Tutorial 3.

Manual Pages

Unix has a built-in “help system” for showing documentation about commands, called man.

Try typing this:

$ man grep

That command launches less to read more than you ever wanted to know about the grep command.

If you want to know how to use a given command, try man <that_command>.

Saving Time on the Command Line

Tab completion is an extremely handy service available on the command line.

It can save you time and frustration by avoiding retyping filenames all the time.

Say you want to run this command to find all the occurrences of “gif” in robots.txt:

$ grep gif robots.txt

Try just typing part of the command first:

$ grep gif ro

Then hit the [tab] key.

Your shell should complete the name of the robots.txt file.

History

Type history at the command line to see your command history.

$ history

The Up Arrow

Use the up arrow on the command line instead of re-typing your most recent command. Want the command before that? Type the up arrow again!

Try it out! Hit the up arrow! If you’ve been stepping through these tips, you’ll probably see the command history.

Ctrl+r

If you need to find a command you typed 10 commands ago, instead of typing the up arrow 10 times, hold the [control] key and type [r]. Then, type a few characters contained within the command you’re looking for. Ctrl+r will reverse search your history for the most recent command that has that string.

Try it out! Assuming you’ve been working your way through all these tutorials, typing Ctrl+r and then grep will show you your last grep command.

Hit return to execute that command again.

Git

Git is an extremely popular tool for software version control. Its primary purpose is to track your work, ensuring that as you make incremental changes to files, you will always be able to revert to, see, and combine old versions. When combined with a remote repository (in our case GitHub), it also ensures that you have an online backup of your work. Git is also a very effective way for multiple people to work together: collaborators can upload their work to a shared repository. (It certainly beats emailing versions back and forth.)

In CS 3410, we will use git as a way of disseminating assignment files to students and as a way for you to transfer, store, and back up your work. Please work in the class git repository that is created for you and not a repository of your own. (Publishing your code to a public repository is a violation of academic integrity rules.)

A good place to start when learning git is the free Pro Git book. This reference page will provide only a very basic intro to the most essential features of git.

Installing Git

If you do not have git installed on your own laptop, you can install it from the official website. If you encounter any problems, ask a TA.

Activate your Cornell GitHub Account

Before we can create a repository for you in this class, we will need you to activate your Cornell GitHub account. Go to https://github.coecis.cornell.edu and log in with your Cornell NetID and password.

Create a Repository

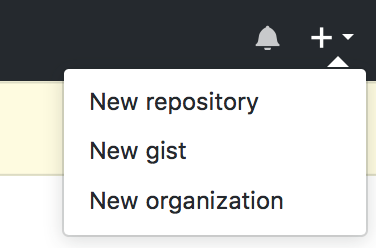

Create a new repository on GitHub: Go to the top right of the GitHub home page, where you’ll see a bell, a plus sign, and your profile icon (which is likely just a pixely patterned square unless you uploaded your own). Click on the downward pointing triangle to the right of the plus sign, and you’ll see a drop-down menu that looks like this:

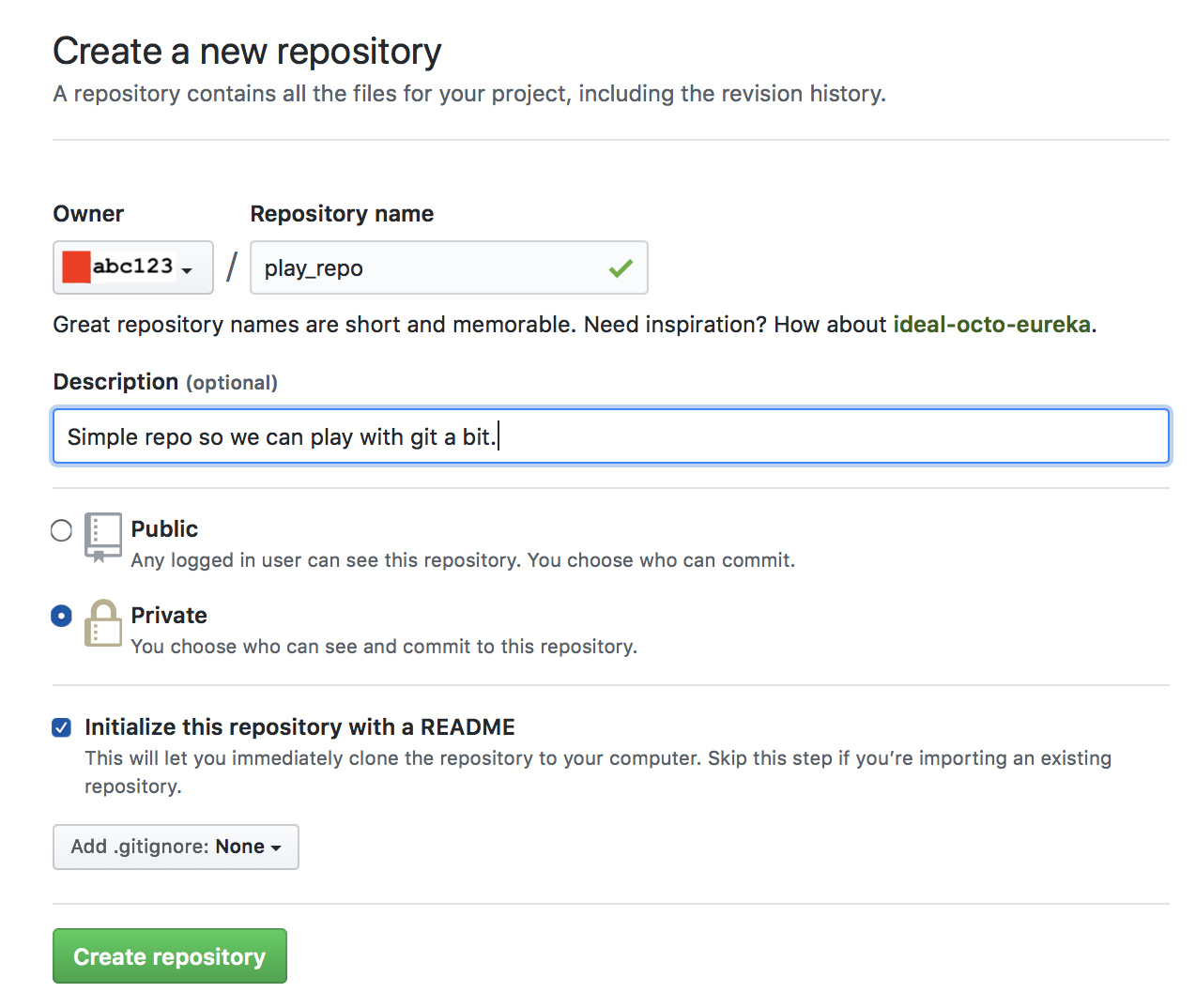

Click on “New repository” and then create a new repository like this:

Note that the default setting is to make your repository public (visible to everyone). Any repository that contains code for this course should be made private; a public repository shares your code with others, which constitutes an academic integrity violation.

Now click on the green “Create Repository” button.

Set Up Credentials

Before you can clone your repository (get a local copy to work on), you will need to set up SSH credentials with GitHub.

First, generate an SSH key if you don’t already have one. Just type this command:

$ ssh-keygen -C "<netid>@cornell.edu"

and use your NetID. The prompts will let you protect your key with a passphrase if you want.

Next, follow the instructions from GitHub to add the new SSH key to your GitHub account.

To summarize, go to Settings -> SSH and GPG Keys -> New SSH key, and then paste the contents of a file named something like ~/.ssh/id_rsa.pub.

Clone the Repository

Cloning a git repository means that you create a local copy of its contents. You should clone the repository onto your own local machine (lab computer or laptop).

Find the green button on the right side of the GitHub webpage for your repository that says “Code”. Click it, then choose the “SSH” tab. Copy the URL there, which will look like this:

git@github.coecis.cornell.edu:abc123/play_repo.git

In a terminal, navigate to the folder where you would like to put your repository, and type:

$ git clone <PASTE>

That is, just type git clone (then a space) and paste the URL from GitHub.

Run this command to download the repository from GitHub to your computer.

At this point, you’ll get authentication errors if your SSH key isn’t set up correctly. So try that again if you get messages like “Please make sure you have the correct access rights and the repository exists.”

Look Around

Type cd play_repo to enter the repository. Type ls and you’ll see that your repo currently has just one file in it called README.md.

Type git status to see an overview of your repository. This command will show the status of your repository and the files that you have changed. At first, this command won’t show much.

Tracking Files with Git

There are 3 steps to track a file with git and send it to GitHub: stage, commit, and push.

Stage

To try it out, let’s make a new file.

Create a new file called <netid>.txt (use your NetID in there).

Now type git add <netid>.txt (again using your NetID) from the directory containing the file to stage the file.

Staging informs git of the existence of the file so it can track its changes.

Type git status again.

You will see the file you added highlighted in green.

This means that the file is staged, but we still have two more steps to go to send your changes to GitHub.

(You might consider going back to the GitHub web interface to confirm that your new <netid>.txt file doesn’t show up there yet.)

Commit

A commit is a record of the state of the repository at a specific time. To make a commit, run this command:

$ git commit -m "Added my favorite color!"

The message after -m is a commit message, which is an explanation of the changes that you have made since you last committed. Good commit messages help you keep track of the work you’ve done.

This commit is now on your local computer. Try refreshing the GitHub repository page to confirm that it’s still not on the remote repository.

Push

To send our changes to the server, type this:

$ git push

The git push command sends any commits you have on your local machine to the remote machine.

You should imagine you are pushing them over the internet to GitHub’s servers.

Try refreshing the GitHub repository page again—now you should see your file there!

Pull

You will also want to retrieve changes from the remote server. This is especially helpful if you work on the repository from different machines. Type this command:

$ git pull

For now, this should just say that everything’s up to date. But if there were any new changes on the server, this would download them.

Typical Usage Pattern

Here is a good git workflow you should follow:

- git pull: Type this before you start working to make sure you’re working on the most up-to-date version of your code (also in case the staff had to push any updates to you).

- Work on your files.

- git add file.txt: Type this for each file you either modified or added to the repo while you were working. Not sure what you touched or what’s new? Type git status and git will tell you!

- git commit -m "very helpful commit message": Save your changes in a commit. Write a message to remind your future self what you did.

- git push: Remember that, without the push, the changes are only on your machine. If your laptop falls in a lake, then they’re gone forever. Push them to the server for safekeeping.

Git can be a little overwhelming, and sometimes the error messages can be hard to understand. Most of the time, following the instructions git gives you will help; if you run into real trouble, though, please ask a TA. If things get really messed up, don’t be afraid to clone a new copy of your repository and go from there.

It is completely OK to only know a few of the most common git commands and to not really understand how the whole thing works.

Many professional programmers get immense value out of git while only ever using add, commit, push, and pull.

Don’t worry about learning everything about git up front—you are already ready to use it productively!

Even More Commands

Here are a few other commands you might find useful. This is far from everything—there is a lot more in the git documentation.

Log

Type this command:

$ git log <netid>.txt

You’ll see the history of your <netid>.txt file.

You will see the author, time, and commit message for every commit of this file, along with the commit hash, which is how Git labels your commits and how you reference them if you need to.

At this point, you’ll only see a single commit.

But if you were to change the file and run git commit again, you would see the new change in the log.

You can also type git log with no filename afterward to get a history of all commits in your entire repository.

Stash

If you want to revert to the state of the last commit after making some new changes, you can type git stash.

Stashed changes are retrievable, but it might be a hassle to do so.

git stash only works on changes that have not yet been committed.

If you accidentally commit a change and want to wipe it out before pulling work from other machines, use git reset HEAD~1 to undo the last commit (and then stash).

Introduction to SSH

SSH (Secure SHell) is a tool that lets you connect to another computer over the Internet to run commands on it.

You run the ssh command in your terminal to use it.

The Cornell CS department has several machines available to you, if you want to use them to do your work. SSH is the (only) way to connect to these machines.

Accessing Cornell Resources from Off Campus

Cornell’s network requires you to be on campus to connect to Cornell machines. (This is a security measure: it is meant to prevent attacks from off campus.)

To access Cornell machines when you’re elsewhere, Cornell provides a mechanism called a Virtual Private Network (VPN) that lets you pretend to be on campus. Read more about Cornell’s VPN if you need it.

Log On

Make sure you are connected to the VPN or Cornell’s WiFi. Open a terminal window and type:

ssh <netid>@ugclinux.cs.cornell.edu

(Replace <netid> with your NetID.)

Type yes and hit enter to accept the new SSH host key.

Now type your NetID password.

You’re in! You should see a shell prompt; you can follow the Unix shell tutorial to learn how to use it.

Here, ugclinux.cs.cornell.edu is the name of a collection of servers that Cornell runs for this purpose.

That’s what you’d replace with a different domain name to connect to a different machine.

scp

Suppose you have a file on the ugclinux machines and you want to get a copy locally onto your machine.

The scp command can do this.

It works like a super-powered version of the cp command that can copy between machines.

Say your file game.c is located at /home/yourNetID/mygame/game.c on ugclinux.

On your local machine (i.e., when not connected over SSH already), type:

$ scp yourNetID@ugclinux.cs.cornell.edu:mygame/game.c .

Here are the parts of that command:

$ scp <user>@<host>:<source> <dest>

<user> and <host> are the same information you use to connect to the remote machine with the ssh command.

<source> is the file on that remote machine that you want to obtain, and <dest> is the place where you want to copy that file to.

Makefile Basics

This document is a very brief reference on how to read the Makefiles provided in this class. It is meant to be just enough to help you read the Makefiles we provide, not a complete overview of Makefiles or a guide to writing your own. If you are interested in learning more, there are some good tutorials online, such as this walkthrough.

A Makefile is often used with C to help with automating the (repetitive) task of compiling multiple files. This is especially helpful in cases where there are multiple pieces of your codebase you want to compile separately, such as choosing to test a program or run that program.

Variables

To illustrate how this works, let us examine a few lines in the Makefile that will be used for the minifloat assignment. Our first line of code is to define a variable CFLAGS:

CFLAGS=-Wall -Wpedantic -Werror -Wshadow -Wformat=2 -Wconversion -std=c99

As in other settings, defining this variable CFLAGS allows us to use the contents (a string in this case) later in our Makefile. Our specific choice of CFLAGS here is to indicate that we are defining the flags (for C) that we will be using in this Makefile. Later, when we use this variable in-line, the Makefile will simply replace the variable with whatever we defined it as, thus allowing us to use the same flags consistently for every command we run.

Commands

The rest of our Makefile for this assignment will consist of commands. A command has the following structure:

name: dependent_files
	operation_to_run

The name of a command is what you run in your terminal after make, such as make part1 or make all (this gets a bit more complicated in some cases). The dependent_files indicate which files this command depends on – the Makefile will only run this command if one of these files changed since the last time we ran it. Finally, the operation is what actually gets run in our console, such as when we run gcc main.c -o main.o.

Example Command

To make this more concrete, let us examine our first command for part1:

part1: minifloat.c minifloat_test_part1.c minifloat_test_part1.expected
	$(CC) $(CFLAGS) minifloat.c minifloat_test_part1.c -o minifloat_test_part1.out

This command will execute when we run make part1, but only if one of minifloat.c, minifloat_test_part1.c, or minifloat_test_part1.expected has been modified since we last ran this command. What actually runs is the next line, with the $(CC), $(CFLAGS), and a bunch of filenames. $(CC) is a standard Makefile variable that is replaced by our C compiler – in our case, this is gcc. The $(CFLAGS) variable here is what we defined earlier, so we include all of the flags we desired. Finally, the list of files is exactly the same as we might normally run with gcc. In total, then, this entire operation will be translated to:

$(CC) $(CFLAGS) minifloat.c minifloat_test_part1.c -o minifloat_test_part1.out

-->

gcc $(CFLAGS) minifloat.c minifloat_test_part1.c -o minifloat_test_part1.out

-->

gcc -Wall -Wpedantic -Werror -Wshadow -Wformat=2 -Wconversion -std=c99 minifloat.c minifloat_test_part1.c -o minifloat_test_part1.out

This compilation would be a huge pain to type out every time, especially with all of those flags (and easy to mess up), but with the Makefile, we can run all this with just make part1. We can do the same with make part2 to run the next set of commands instead.

Clean

One final note: it is conventional (though not required) to include a make clean command that removes any generated files, which is handy for tidying up our folder or before pushing our work to a Git repository. In our particular file, we have defined clean to remove the generated .out files and any .txt files that were used for testing:

clean:
	rm -f *.out.stackdump
	rm -f *.out
	rm -f *.txt

Complete Makefile

For reference, the entirety of our Makefile is included here:

CFLAGS=-Wall -Wpedantic -Werror -Wshadow -Wformat=2 -Wconversion -std=c99
CC = gcc

all: part1 part2 part3

part1: minifloat.c minifloat_test_part1.c minifloat_test_part1.expected
	$(CC) $(CFLAGS) minifloat.c minifloat_test_part1.c -o minifloat_test_part1.out

part2: minifloat.c minifloat_test_part2.c
	$(CC) $(CFLAGS) minifloat.c minifloat_test_part2.c -o minifloat_test_part2.out

part3: minifloat.c minifloat_test_part3.c
	$(CC) $(CFLAGS) minifloat.c minifloat_test_part3.c -o minifloat_test_part3.out

clean:
	rm -f *.out.stackdump
	rm -f *.out
	rm -f *.txt

.PHONY: all clean

C Programming

Much of the work in CS 3410 involves programming in C. This section of the site contains some overviews of most of the C features you will need in CS 3410.

For authoritative details on C and its standard library, the C reference on cppreference.com (despite the name) is a good place to look.

For example, here’s a list of all the functions in the stdio.h header, and here’s the documentation specifically about the fputs function.

Compiling and Running C Code

Before you proceed with this page, follow the instructions to set up the course’s RISC-V infrastructure.

Your First C Program

Copy and paste this program into a text file called first.c:

#include <stdio.h>

int main() {

printf("Hello, CS 3410!\n");

return 0;

}

Next, run this command:

$ rv gcc -o first first.c

Here are some things to keep in mind whenever these pages ask you to run a command:

- The $ is not part of the command. This is meant to evoke the command-line prompt in many shells, and it is there to indicate to you that the text that follows is a command that you should run. Do not include the $ when you type the command.

- Our course’s RISC-V infrastructure setup has you create an rv alias for running commands inside the infrastructure container. We will not always include an rv prefix on example commands we list in these pages. Whenever you need to run a tool that comes from the container, use the rv prefix or some other mechanism to make sure the command runs in the container.

- As with all shell commands, it really matters which directory you’re currently “standing in,” called the working directory. Here, first.c and first are both filenames that implicitly refer to files within the working directory. So before running this command, be sure to cd to the place where your first.c file exists.

If everything worked, you can now run this program with this command:

$ rv qemu first

Hello, CS 3410!

(Just type the rv qemu first part. The next line, without the $, is meant to show you what the command should print as output after you hit return.)

This command uses QEMU, an emulator for the RISC-V instruction set, to run the program we just compiled, which is in the file named first.

Recommended Options

While the simple command gcc -o first first.c works fine for this simple example, we officially recommend that you always use a few additional command-line options that make the GCC compiler more helpful.

Here are the ones we recommend:

-Wall -Wextra -Wpedantic -Wshadow -Wformat=2 -std=c17

In other words, here’s our complete recommended command for compiling your C code:

$ rv gcc -Wall -Wextra -Wpedantic -Wshadow -Wformat=2 -std=c17 hi.c

Many assignments will include a Makefile that supplies these options for you.

Checking for Common C Errors

Memory-related bugs in C programs are extremely common! The worst thing about them is that they can cause obscure problems silently, without even crashing with a reasonable error message. Fortunately, GCC has built-in tools called sanitizers that can (much of the time, but not always) catch these bugs and give you reasonable error messages.

To use the sanitizers, add these flags to your compiler command:

-g -fsanitize=address -fsanitize=undefined

So here’s a complete compiler command with sanitizers enabled:

$ rv gcc -Wall -Wextra -Wpedantic -Wshadow -Wformat=2 -std=c17 -g -fsanitize=address -fsanitize=undefined hi.c

Then run the resulting program to check for errors.

We recommend trying the sanitizers whenever your code does something mysterious or unpredictable. It’s an unfortunate fact of life that, unlike many other languages, bugs in C code can silently cause weird behavior; sanitizers can help counteract this deeply frustrating problem.

C Basics

This section is an overview of the basic constructs in any C program.

Variable Declarations

C is a statically typed language, so when you declare a variable, you must also declare its type.

int x;

int y;

Variable declarations contain the type (int in this example) and the variable name (x and y in this example).

Like every statement in C, they end with a semicolon.

Assignment

Use = to assign new values to variables:

int x;

x = 4;

As a shorthand, you can also include the assignment in the same statement as the declaration:

int y = 6;

Expressions

An expression is a part of the code that evaluates to a value, like 10 or 7 * (4 + 2) or 3 - x.

Expressions appear in many places, including on the right-hand side of an = in an assignment.

Here are a few examples:

int x;

x = 4 + 3 * 2;

int y = x - 6;

x = x * y;

Functions

To define a function, you need to write these things, in order: the return type, the function name, the parameter list (each with a type and a name), and then the body. The syntax looks like this:

<return type> <name>(<parameter type> <parameter name>, ...) {

<body>

}

Here’s an example:

int myfunc(int x, int y) {

int z = x - 2 * y;

return z * x;

}

Function calls look like they do in many other languages: you write the function name and then, in parentheses, the arguments.

For example, you can call the function above using an expression like myfunc(10, 4).

The main Function

Complete programs must have a main function, which is the first one that will get called when the program starts up.

main should always have a return type of int.

It can optionally have arguments for command-line arguments (covered later).

Here’s a complete program:

int myfunc(int x, int y) {

int z = x - 2 * y;

return z * x;

}

int main() {

int z = myfunc(1, 2);

return 0;

}

The return value for main is the program’s exit status.

As a convention, an exit status of 0 means “success” and any nonzero number means some kind of exceptional condition.

So, most of the time, use return 0 in your main.

Includes

To use functions declared somewhere else, including in the standard library, C uses include directives. They look like this:

#include <hello.h>

#include "goodbye.h"

In either form, we’re supplying the filename of a header file.

Header files contain declarations for functions and variables that C programs can use.

The standard filename extension for header files in C is .h.

You should use the angle-bracket version for library headers and the quotation-mark version for header files you write yourself.

Printing

To print output to the console, use printf, a function from the C standard library which takes:

- A string to print out, which may include format specifiers (more on these in a moment).

- For each format specifier, a value to fill in.

The first string might have no format specifiers at all, in which case the printf only has a single argument.

Here’s what that looks like:

#include <stdio.h>

int main() {

printf("Hello, world!\n");

}

The \n part is an escape sequence that indicates a newline, i.e., it makes sure the next thing we output goes on the next line.

Format specifiers start with a % sign and include a few more characters describing how to print each additional argument.

For example, %d prints a given argument as a decimal integer.

Here’s an example:

#include <stdio.h>

int main() {

int x = 3;

int y = 4;

printf("x + y = %d.\n", x + y);

}

Here are some format specifiers for printing integers in different bases:

| Base | Format Specifier | Example |
|---|---|---|
| decimal | %d | printf("%d", i); |
| hexadecimal | %x | printf("%x", i); |
| octal | %o | printf("%o", i); |

And here are some common format specifiers for other data types:

| Data Type | Format Specifier | Example |
|---|---|---|
| string | %s | printf("%s", str); |
| char | %c | printf("%c", c); |
| float | %f | printf("%f", f); |
| double | %lf | printf("%lf", d); |
| long | %ld | printf("%ld", l); |
| long long | %lld | printf("%lld", ll); |
| pointers | %p | printf("%p", ptr); |

See the C reference for details on the full set of available format specifiers.

Basic Types in C

Some Common Data Types

| Type | Common Size in Bytes | Interpretation |
|---|---|---|
| char | 1 | one ASCII character |
| int | 4 | signed integer |
| float | 4 | single-precision floating-point number |
| double | 8 | double-precision floating-point number |

A surprising quirk about C is that the sizes of some types can be different in different compilers and platforms! So this table lists common byte sizes for these types on popular platforms.

Characters

Every character corresponds to a number. The mapping between characters and numbers is called the text encoding, and the ubiquitous one for basic characters in the English language is called ASCII. Here is a table with some of the most common characters in ASCII:

For all the characters in ASCII (and beyond), see this ASCII table.

Booleans

C does not have a bool data type available by default.

Instead, you need to include the stdbool.h header:

#include <stdbool.h>

That lets you use the bool type and the true and false expressions.

If you get an error like unknown type name 'bool', just add the include above to fix it.

Prototypes and Headers

Declare Before Use

In C, the order of declarations matters. This program with two functions works fine:

#include <stdio.h>

void greet(const char* name) {

printf("Hello, %s!\n", name);

}

int main() {

greet("Eva");

return 0;

}

But what happens if you just reverse the two function definitions?

#include <stdio.h>

int main() {

greet("Eva");

return 0;

}

void greet(const char* name) {

printf("Hello, %s!\n", name);

}

The compiler gives us this somewhat confusing error message:

error: implicit declaration of function 'greet'

The problem is that, in C, you have to declare every name before you can use it.

So the declaration of greet has to come earlier in the file than the call to greet("Eva").

Declarations, a.k.a. Prototypes

This declare-before-use rule can make it awkward to define functions in the order you want, and it seems to be a big problem for mutual recursion. Fortunately, C has a mechanism to let you declare a name before you define what it means. All the functions we’ve seen so far have been definitions (a.k.a. implementations), because they include the body of the function. A function declaration (a.k.a. prototype) looks the same, except that we leave off the body and just write a semicolon instead:

void greet(const char* name);

A declaration like this tells the compiler the name and type of the function, and it amounts to a promise that you will later provide a complete definition.

Here’s a version of our program above that works and keeps the function definition order we want (main and then greet):

#include <stdio.h>

void greet(const char* name);

int main() {

greet("Eva");

return 0;

}

void greet(const char* name) {

printf("Hello, %s!\n", name);

}

By including the declaration at the top of the file, we are now free to call greet even though the definition comes later.

Header Files

It is so common to need to declare a bunch of functions so you can call them later that C has an entire mechanism to facilitate this: header files.

A header is a C source-code file that contains declarations that are meant to be included in other C files.

You can then “copy and paste” the contents of header files into other C code using the #include directive.

Even though the C language makes no formal distinction between what you can do in headers and in other files, it is a universal convention that headers have the .h filename extension while “implementation” files use the .c extension.

For example, we could put our greet declaration into a utils.h header file:

void greet(const char* name);

Then, we might put this in main.c:

#include <stdio.h>

#include "utils.h"

int main() {

greet("Eva");

return 0;

}

void greet(const char* name) {

printf("Hello, %s!\n", name);

}

The line #include "utils.h" instructs the C preprocessor to look for the file called utils.h and paste its entire contents in at that location.

Because the preprocessor runs before the compiler, this two-file version of our project looks exactly the same to the compiler as if we had merged the two files by hand.

You can read more about #include directives, including about the distinction between angle brackets and quotation marks.

Multiple Source Files

Eventually, your C programs will grow large enough that it’s inconvenient to keep them in one .c file.

You could distribute the contents across several files and then #include them, but there is a better way: we can compile source files separately and then link them.

To make this work in our example, we will have three files.

First, our header file utils.h, as before, just contains a declaration:

void greet(const char* name);

Next, we’ll write an accompanying implementation file, utils.c:

#include <stdio.h>

#include "utils.h"

void greet(const char* name) {

printf("Hello, %s!\n", name);

}

As a convention, C programmers typically write their programs as pairs of files: a header and an implementation file, with the same base name and different extensions (.h and .c).

The idea is that the header declares exactly the set of functions that the implementation file defines.

So in that way, the header file acts as a short “table of contents” for what exists in the longer implementation file.

Let’s call the final file main.c:

#include "utils.h"

int main() {

greet("Eva");

return 0;

}

Notably, we use #include "utils.h" to “paste in” the declaration of greet, but we don’t have its definition here.

Now, it’s time to compile the two source files, utils.c and main.c.

Here are the commands to do that:

$ gcc -c utils.c -o utils.o

$ gcc -c main.c -o main.o

(Remember to prefix these commands with rv to use our RISC-V infrastructure.)

The -c flag tells the C compiler to just compile the single source file into an object file, not an executable.

An object file contains the compiled code for a single C source file, but it is not directly runnable yet—for one thing, it might not have a main function.

Using -o utils.o tells the compiler to put the output in a file called utils.o.

As a convention, the filename extension for object files is .o.

You’ll notice that we only compiled the .c files, not the .h files.

This is intentional: header files are only for #includeing into other files.

Only the actual implementation files get compiled.

Finally, we need to combine the two object files into an executable. This step is called linking. Here’s how to do that:

$ gcc utils.o main.o -o greeting

We supply the compiler with two object files as input and tell it where to put the resulting executable with -o greeting.

Now you can run the program:

$ ./greeting

(Use rv qemu greeting to use the course RISC-V infrastructure.)

Control Flow

Logical Operators

Here are some logical operators you can use in expressions:

| Expression | True If… |
|---|---|
| expr1 == expr2 | expr1 is equal to expr2 |
| expr1 != expr2 | expr1 is not equal to expr2 |
| expr1 < expr2 | expr1 is less than expr2 |
| expr1 <= expr2 | expr1 is less than or equal to expr2 |
| expr1 > expr2 | expr1 is greater than expr2 |
| expr1 >= expr2 | expr1 is greater than or equal to expr2 |
| !expr | expr is false (i.e., zero) |
| expr1 && expr2 | expr1 and expr2 are true |
| expr1 \|\| expr2 | expr1 or expr2 is true |

false && expr2 will always evaluate to false, and true || expr2 will always evaluate to true, regardless of what expr2 evaluates to.

This is called “short circuiting”: C evaluates the left-hand side of these expressions first and, if the truth value of that expression means that the other one doesn’t matter, it won’t evaluate the right-hand side at all.

Conditionals

Here is the syntax for if/else conditions:

if (condition) {

// code to execute if condition is true

} else if (another_condition) {

// code to execute if condition is false but another_condition is true

} else {

// code to execute otherwise

}

The else if and else parts are optional.

Switch/Case

A switch statement can be a succinct alternative to a cascade of if/elses when you are checking several possibilities for one expression.

switch (expression) {

case constant1:

// code to execute if expression equals constant1

break;

case constant2:

// code to execute if expression equals constant2

break;

// ...

default:

// code to be executed if expression doesn't match any case

}

While Loop

while (condition) {

// code to execute as long as condition is true

}

For Loop

for (initialization; condition; increment) {

// code to execute for each iteration

}

Roughly speaking, this for loop behaves the same way as this while equivalent:

initialization;

while (condition) {

// code to execute for each iteration

increment;

}

break and continue

To exit a loop early, use a break; statement.

A break statement jumps out of the innermost enclosing loop or switch statement.

If the break statement is inside nested contexts, then it exits only the most immediately enclosing one.

To skip the rest of a single iteration of a loop, but not cancel the loop entirely, use continue.

Declaring Your Own Types in C

Structures

The struct keyword lets you declare a type that bundles together several values, possibly of different types.

To access the fields inside a struct variable, use dot syntax, like thing.field.

Here’s an example:

#include <stdio.h>

struct rect_t {

int left;

int bottom;

int right;

int top;

};

int main() {

struct rect_t myRect;

myRect.left = -4;

myRect.bottom = 1;

myRect.right = 8;

myRect.top = 6;

printf("Bottom left = (%d,%d)\n", myRect.left, myRect.bottom);

printf("Top right = (%d,%d)\n", myRect.right, myRect.top);

return 0;

}

This program declares a type struct rect_t and then uses a variable myRect of that type.

Enumerations

The enum keyword declares a type that can be one of several options.

Here’s an example:

enum threat_level_t {

LOW,

GUARDED,

ELEVATED,

HIGH,

SEVERE

};

void printOneLevel(enum threat_level_t threat) {

switch (threat) {

case LOW:

printf("Green/Low.\n");

break;

// ...omitted for brevity...

case SEVERE:

printf("Red/Severe.\n");

break;

}

}

void printLevels() {

printf("Threat levels are:\n");

for (int i = LOW; i <= SEVERE; i++) {

printOneLevel(i);

}

}

This code declares a type enum threat_level_t that can be one of 5 values.

Type Aliases

You can use the typedef keyword to give new names to existing types.

Use typedef <old type> <new name>;, like this:

typedef int whole_number;

Now, you can use whole_number to mean the same thing as int.

Short Names for Structs and Enums

You may have noticed that struct and enum declarations make types that are kind of long and hard to type.

For example, we declared a type enum threat_level_t.

Wouldn’t it be nice if this type could just be called threat_level_t?

typedef is also useful for defining these short names.

You could do this:

enum _threat_level_t { ... };

typedef enum _threat_level_t threat_level_t;

And that does work! But there’s also a shorter way to do it, by combining the enum and the typedef together:

typedef enum {

...

} threat_level_t;

That defines an anonymous enumeration and then immediately gives it a sensible name with typedef.

Pointers!

Pointers are central to programming in C, yet are often one of the most foreign concepts to new C coders.

A Motivating Example

Suppose we want to write a swap function that will take two integers and swap their values. With the programming tools we have so far, our function might look something like this:

void swap(int a, int b) {

int temp = a;

a = b;

b = temp;

}

This won’t work how we want!

If we call swap(foo, bar), the swap function gets copies of the values in foo and bar.

Reassigning a and b just affects those copies—not foo and bar themselves!

How can we give swap direct access to the places where the arguments are stored so it can actually swap them?

Pointers are the answer.

Pointers are addresses in memory, and you can think of them as referring to a value that lives somewhere else.

Declaring a Pointer

For any type T, the type of a pointer to a value of that type is T*: that is, the same type with a star after it.

For example, this code:

char* my_char_pointer;

(pronounced “char star my char pointer”) declares a variable with the name my_char_pointer.

This variable is not a char itself!

Instead, it is a pointer to a char.

Confusingly, the spaces don’t matter. The following three lines of code are all equivalent declarations of a pointer to an integer:

int* ptr;

int *ptr;

int * ptr;

ptr has the type “pointer to an integer.”

Initializing a Pointer

int* ptr = NULL;

The line above initializes the pointer to NULL, or zero, which means the pointer does not point to anything. This is a good idea if you don’t plan on having it point to something just yet. An uninitialized pointer can hold an arbitrary address, so it may point to a random memory location that you wouldn’t want to access unintentionally. C will not initialize pointers for you.

You can check if a pointer is NULL with the expression ptr == NULL.

Assigning to a Pointer, and Getting Addresses

In the case of a pointer, changing its value means changing where it points. For example:

void func(int* x) {

int* y = x;

// ...

The assignment in that code makes y and x point to the same place.

But what if you want to point to a variable that already exists?

C has an & operator, called the “address-of” operator, that produces a pointer to a variable.

For example:

int x = 5;

int* xPtr = &x;

Here, xPtr now points to x.

You can’t assign to the address of things; you can only use & in expressions (e.g., on the right-hand side of an assignment).

So:

y = &x; // this is fine

&x = y; // will not compile!

This rule reflects the fact that you can get the location of any variable, but it is never possible to change the location of a variable.

Dereferencing Pointers

Once you have a pointer with a memory location in it, you will want to access the value that is being pointed at—either reading or changing the value in the box at the end of the arrow.

For this, C has the * operator, known as the “dereferencing” operator because it follows a reference (pointer) and gives you the referred-to value.

You can both read from and write to a dereferenced pointer, so * expressions can appear on either side of an assignment.

For example:

int number = *xPtr; // read the value xPtr points to

printf("the number is %d\n", *xPtr); // read it and then print it

*xPtr = 6; // write the value that xPtr points to

Common Confusion with the * Operator

Do not be confused by the two contexts in which you will see the star (*) symbol:

- Declaring a pointer: int* p;

- Dereferencing a pointer (RHS): r = *p;

- Dereferencing a pointer (LHS): *p = r;

The star is part of the type name when declaring a pointer and is the dereference operator when used in an expression, such as either side of an assignment.

Swap with Pointers

Now that we have pointers, we can correctly write that swap function we wanted! The new version of swap uses a “pass by reference” model in which pointers to arguments are passed to the function.

void swap(int* a, int* b) {

int temp = *a;

*a = *b;

*b = temp;

}

The Arrow Operator

Recall that we used the “dot” operator to access elements within a struct, like myRect.left.

If you instead have a pointer to a struct, you need to dereference it first before you can access its fields,

like (*myRect).left.

Fortunately, C has a shorthand for this case!

You can also write myRect->left to mean the same thing.

In other words, the -> operator works like the . operator except that it also dereferences the pointer on the left-hand side.

Pointer Arithmetic

If pointers are just addresses in memory, and addresses are just integers,

you might wonder if you can do arithmetic on them like you can with ints.

Yes, you can!

Adding n to a pointer to any type T causes the pointer to point n Ts further in memory.

For example, the expression ptr + offset might compute a pointer that is “four ints later in memory” or “six chars later in memory.”

int x = 5;

int *ptr = ...;

x = x + 1;

ptr = ptr + 1;

In this code:

- x + 1: adds 1 to the integer x, producing 6

- ptr + 1: adds the size of an int in bytes to ptr, shifting it to point to the next integer in memory

Printing Pointers

You can print the address stored in a pointer to see what memory location it is pointing to. For example:

printf("Pointer address: %p\n", (void*)ptr);

This will output the memory address the pointer ptr is currently holding.

Arrays

An array is a sequence of same-type values that are consecutive in memory.

Declaring an Array

To declare an array, specify its type and size (the number of items in the sequence). For example, an array of 4 integers can be declared as follows:

int myArray[4];

A few variations on this declaration are:

int myArray[4] = {42, 45, 65, -5}; // initializes the values in the array

int myArray[4] = {0}; // initializes all the values in the array to 0

int myArray[] = {42, 45, 65, -5}; // initializes the values in the array, compiler intuits the array size

Accessing an Array

To refer to an element, one must specify the array name (for example, my_array) and the position number (for example, 0):

int my_array[5];

my_array[0] = 8;

printf("I just initialized the element at index 0 to %d!\n", my_array[0]);

After this code runs, the element at index 0 holds 8, and the other four elements have not been assigned a value yet.

To sum the elements of an array, we might write code like this:

#include <stdio.h>

int sum_array(int *array, int n) {

int sum = 0;

for (int i = 0; i < n; ++i) {

sum += array[i];

}

return sum;

}

int main() {

int data[4] = {4, 6, 3, 8};

int sum = sum_array(data, 4);

printf("sum: %d\n", sum);

return 0;

}