Peer-Reviewed Papers and Publications

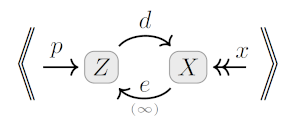

Abstract. We define what it means for a joint probability distribution to be compatible, at a qualitative level, with a set of independent causal mechanisms or, more precisely, with a directed hypergraph A, which is the qualitative structure of a probabilistic dependency graph (PDG). When A represents a qualitative Bayesian network, QIM-compatibility (qualitative independent-mechanism compatibility) with A reduces to satisfying the appropriate conditional independencies. But giving semantics to hypergraphs using QIM-compatibility lets us do much more. For one thing, we can capture functional dependencies. For another, we can use compatibility to capture important aspects of causality: it helps us understand cyclic causal graphs, and to demonstrate structural compatibility, we must essentially produce a causal model. Finally, QIM-compatibility has deep connections to information theory: applying it to cyclic structures helps to clarify a longstanding conceptual issue in information theory.

- Abstract. What should you do with conflicting information? To be rational, you must immediately resolve the inconsistency, so as to maintain a consistent (probabilistic) picture of the world. But how? And is it really critical to do so immediately? Inconsistency is clearly undesirable, but we stand to gain a lot by representing it. This thesis develops a broad theory of how to approach probabilistic modeling with possibly-inconsistent information, unifying and reframing much of the literature in the process. The key ingredient is a novel kind of graphical model, called a Probabilistic Dependency Graph (PDG), which allows for arbitrary (even conflicting) pieces of probabilistic information. In Part I, we establish PDGs as a generalization of other models of mental state, including traditional graphical models such as Bayesian Networks and Factor Graphs, as well as causal models, and even generalizations of probability distributions, such as Dempster-Shafer belief functions. In Part II, we show that PDGs also capture modern neural representations. Surprisingly, standard loss functions can be viewed as the inconsistency of a PDG that models the situation appropriately. Furthermore, many important algorithms in AI are instances of a simple approach to resolving inconsistencies. In Part III, we provide algorithms for PDG inference, and uncover a deep algorithmic equivalence between the problems of inference and calculating a PDG’s numerical degree of inconsistency. We also develop powerful yet intuitive principles for reasoning with (and about) PDGs.

- Mixture Languages. Oliver Richardson and Jialu Bao

POPL 2024 Languages for Inference (LAFI) Workshop

- The Local Inconsistency Resolution Algorithm. Oliver Richardson

ICML 2023 Workshop on Structured Probabilistic Inference & Generative Modeling (SPIGM); Workshop on Localized Learning (LLW)

- Inference for Probabilistic Dependency Graphs. Oliver Richardson, Joseph Halpern, and Christopher De Sa

UAI 2023

Abstract.

In a world blessed with a great diversity of loss functions, we argue that the choice between them is not a matter of taste or pragmatics, but of model. Probabilistic dependency graphs (PDGs) are probabilistic models that come equipped with a measure of "inconsistency". We prove that many standard loss functions arise as the inconsistency of a natural PDG describing the appropriate scenario, and use the same approach to justify a well-known connection between regularizers and priors. We also show that PDG inconsistency captures a large class of statistical divergences, and detail the benefits of thinking of them in this way, including an intuitive visual language for deriving inequalities between them. In variational inference, we find that the ELBO, a somewhat opaque objective for latent variable models, and variants of it arise for free out of uncontroversial modeling assumptions, as do simple graphical proofs of their corresponding bounds. Finally, we observe that inconsistency becomes the log partition function (free energy) in the setting where PDGs are factor graphs.

- Probabilistic Dependency Graphs. Oliver Richardson and Joseph Halpern

AAAI 2021

- Complexity and Scale: Understanding the Creative. Oliver Richardson

International Association of Computing and Philosophy (IACAP) 2014

- Capitalization in the St Petersburg Game: Why Statistical Distributions Matter. Mariam Thalos and Oliver Richardson

Politics, Philosophy & Economics 2014

Academic Talks

- The Pursuit of Epistemic Consistency as a "Universal" Objective.

@ ILIAD Conference, 29 Jul 2024.

- A Probabilistic Model of Belief, Dependence, and Inconsistency.

B Exam @ Cornell CS Department, 16 Jul 2024.

- How to Compute with PDGs: Inference, Inconsistency Measurement, and the Close Relationship Between the Two.

Invited Talk @ Cornell CS Theory Seminar, 19 Feb 2024.

- Learning, Inference, and the Pursuit of Consistency.

Invited Talk @ Cornell CS Student Colloquium, 7 Dec 2023.

- Probabilistic (In)consistency as a Basis for Learning and Inference.

Invited Talk @ University of Tennessee CS Dept, 29 Nov 2023.

- A Tutorial on Bialgebra.

@ Cornell Seminar on Programming Languages, 17 Sep 2022.

- Loss as the Inconsistency of a Probabilistic Dependency Graph: Choose Your Model, Not Your Loss Function.

Invited Talk @ Cornell Seminar on Artificial Intelligence, 2 Sep 2022.

- Probabilistic Dependency Graphs and Inconsistency: How to Model, Measure, and Mitigate Internal Conflict.

A Exam @ Cornell CS Department, 17 Sep 2021.

- Probabilistic Dependency Graphs.

@ Cornell CS Theory Tea, 17 Mar 2021.

- Cat Thoughts: An Intro to Categorical Thinking.

@ Cornell Graduate Student Seminar, 20 Feb 2019.