Remember the Bayes Optimal classifier: If we are provided with \(P(X,Y)\) we can predict the most likely label for \(\mathbf{x}\), formally \(\operatorname*{argmax}_y P(y|\mathbf{x})\). It is therefore worth considering if we can estimate \(P(X,Y)\) directly from the training data. If this is possible (to a good approximation) we could then use the Bayes Optimal classifier in practice on our estimate of \(P(X,Y)\).

In fact, many supervised learning can be viewed as estimating \(P(X, Y)\). Generally, they fall into two categories:

Suppose you find a coin and it's ancient and very valuable. Naturally, you ask yourself, "What is the probability that this coin comes up heads when I toss it?" You toss it \(n = 10\) times and obtain the following sequence of outcomes: \(D=\{H, T, T, H, H, H, T, T, T, T\}\). Based on these samples, how would you estimate \(P(H)\)? We observed \(n_H=4\) heads and \(n_T=6\) tails. So, intuitively, $$ P(H) \approx \frac{n_H}{n_H + n_T} = \frac{4}{10}= 0.4 $$ Can we derive this more formally?

The estimator we just mentioned is the Maximum Likelihood Estimate (MLE). For MLE you typically proceed in two steps: First, you make an explicit modeling assumption about what type of distribution your data was sampled from. Second, you set the parameters of this distribution so that the data you observed is as likely as possible.

Let us return to the coin example. A natural assumption about a coin toss is that the distribution of the observed outcomes is a binomial distribution. The binomial distribution has two parameters \(n\) and \(\theta\) and it captures the distribution of \(n\) independent Bernoulli (i.e. binary) random events that have a positive outcome with probability \(\theta\). In our case \(n\) is the number of coin tosses, and \(\theta\) could be the probability of the coin coming up heads (e.g. \(P(H)=\theta\)). Formally, the binomial distribution is defined as \begin{align} P(D\mid \theta) &= \begin{pmatrix} n_H + n_T \\ n_H \end{pmatrix} \theta^{n_H} (1 - \theta)^{n_T}, \end{align} and it computes the probability that we would observe exactly \(n_H\) heads, \(n_T\) tails, if a coin was tossed \(n=n_H+n_T\) times and its probability of coming up heads is \(\theta\).

MLE Principle: Find \(\hat{\theta}\) to maximize the likelihood of the data, \(P(D; \theta)\): \begin{align} \hat{\theta}_{MLE} &= \operatorname*{argmax}_{\theta} \,P(D ; \theta) \end{align}

Often we can solve this maximization problem with a simple two step procedure: 1. plug in all the terms for the distribution, and take the \(\log\) of the function. 2. Compute its derivative, and equate it with zero. Taking the log of the likelihood (often referred to as the log-likelihood) does not change its maximum (as the log is a monotonic function, and the likelihood positive), but it turns all products into sums which are much easier to deal with when you differentiate. Equating the derivative with zero is a standard way to find an extreme point. (To be precise you should verify that it really is a maximum and not a minimum, by verifying that the second derivative is negative.)

Returning to our binomial distribution, we can now plug in the definition and compute the log-likelihood: \begin{align} \hat{\theta}_{MLE} &= \operatorname*{argmax}_{\theta} \,P(D; \theta) \\ &= \operatorname*{argmax}_{\theta} \begin{pmatrix} n_H + n_T \\ n_H \end{pmatrix} \theta^{n_H} (1 - \theta)^{n_T} \\ &= \operatorname*{argmax}_{\theta} \,\log\begin{pmatrix} n_H + n_T \\ n_H \end{pmatrix} + n_H \cdot \log(\theta) + n_T \cdot \log(1 - \theta) \\ &= \operatorname*{argmax}_{\theta} \, n_H \cdot \log(\theta) + n_T \cdot \log(1 - \theta) \end{align} We can then solve for \(\theta\) by taking the derivative and equating it with zero. This results in \begin{align} \frac{n_H}{\theta} = \frac{n_T}{1 - \theta} \Longrightarrow n_H - n_H\theta = n_T\theta \Longrightarrow \theta = \frac{n_H}{n_H + n_T} \end{align} A nice sanity check is that \(\theta\in[0,1]\).

Assume you have a hunch that \(\theta\) is close to \(0.5\). But your sample size is small, so you don't trust your estimate. Simple fix: Add \(m\) imaginery throws that would result in \(\theta'\) (e.g. \(\theta = 0.5\)). Add \(m\) Heads and \(m\) Tails to your data. $$ \hat{\theta} = \frac{n_H + m}{n_H + n_T + 2m} $$ For large \(n\), this is an insignificant change. For small \(n\), it incorporates your "prior belief" about what \(\theta\) should be. Can we derive this formally?

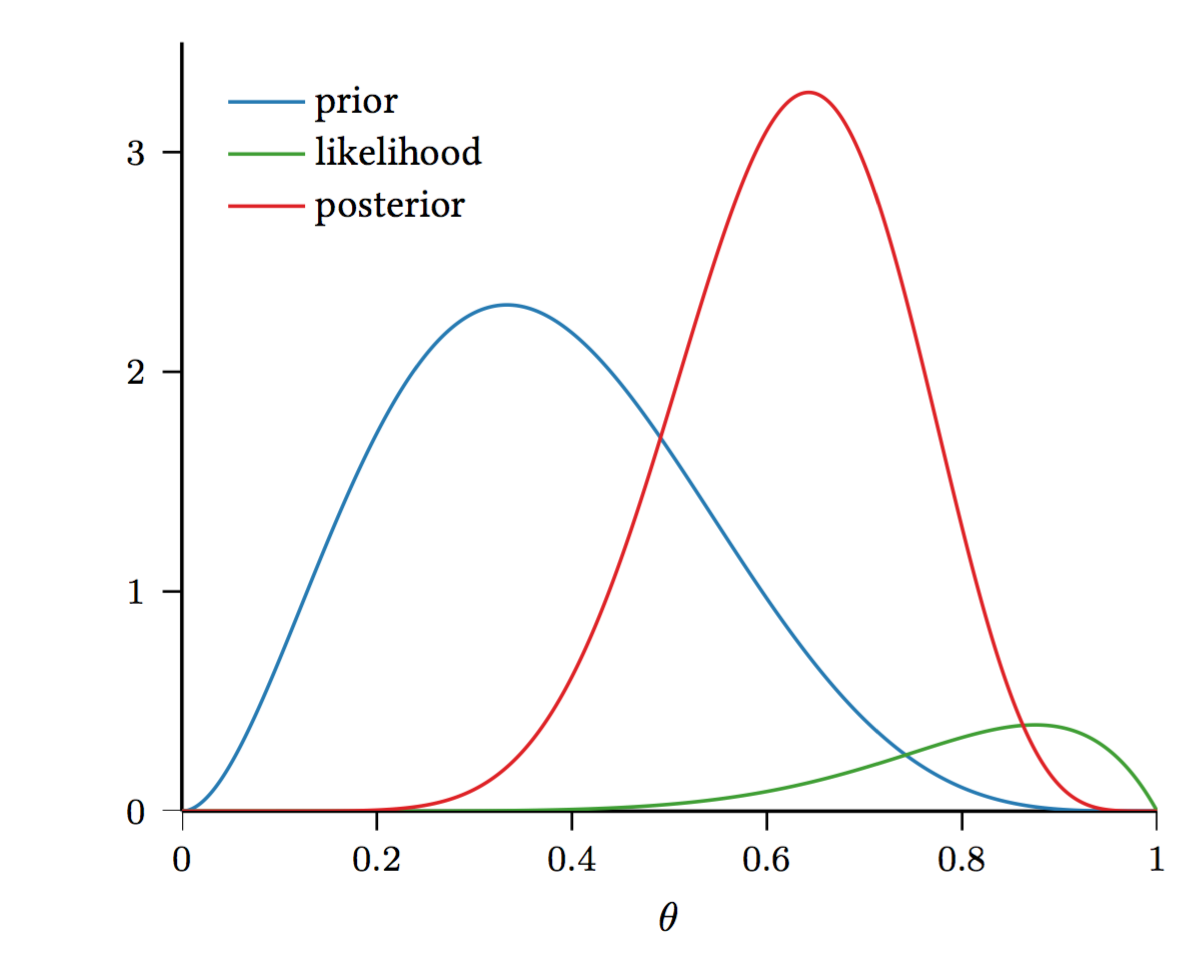

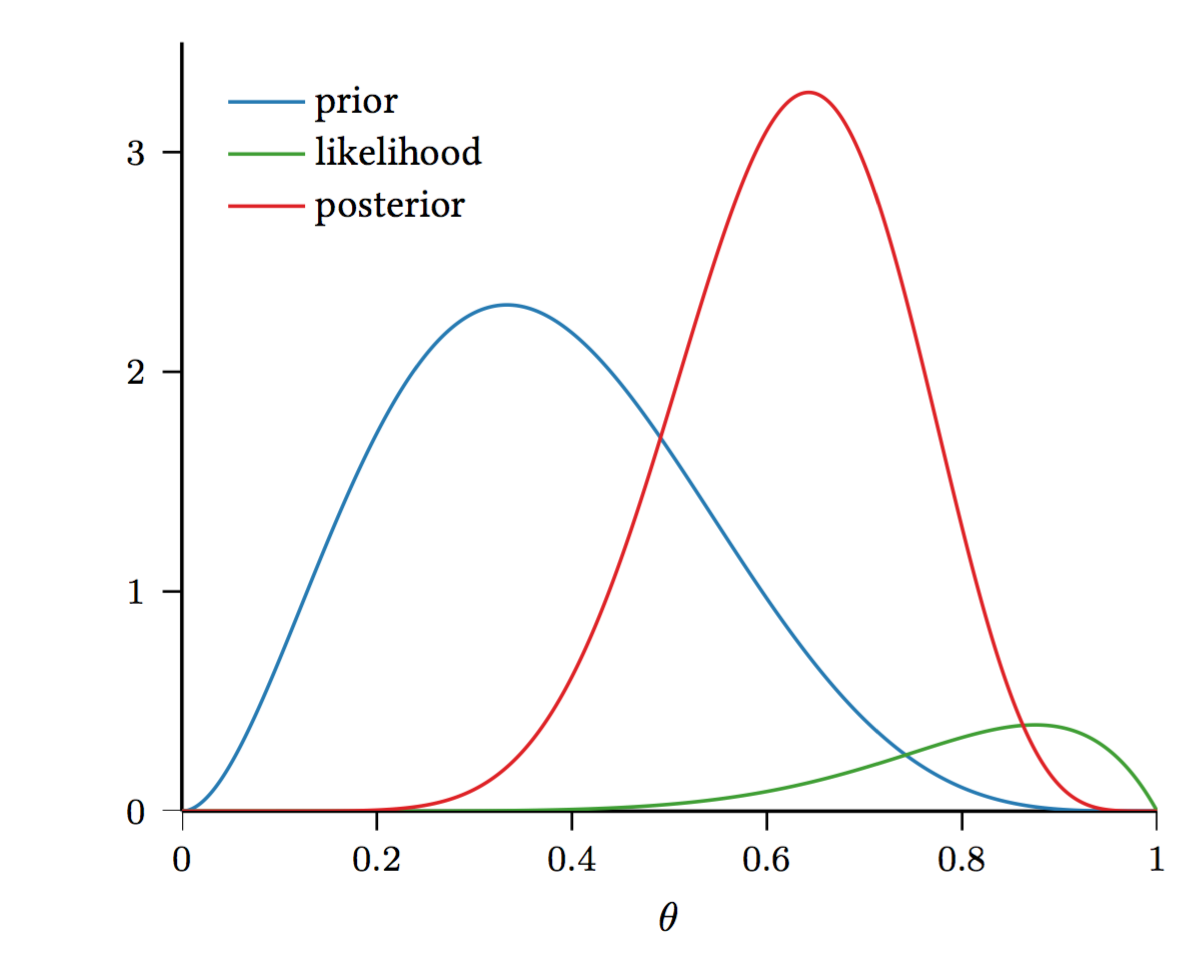

Model \(\theta\) as a random variable, drawn from a distribution \(P(\theta)\). Note that \(\theta\) is not a random variable associated with an event in a sample space. In frequentist statistics, this is forbidden. In Bayesian statistics, this is allowed and you can specify a prior belief \(P(\theta)\) defining what values you believe \(\theta\) is likely to take on.

Now, we can look at \(P(\theta \mid D) = \frac{P(D\mid \theta) P(\theta)}{P(D)}\) (recall Bayes Rule!), where

A natural choice for the prior \(P(\theta\)) is the Beta distribution: \begin{align} P(\theta) = \frac{\theta^{\alpha - 1}(1 - \theta)^{\beta - 1}}{B(\alpha, \beta)} \end{align} where \(B(\alpha, \beta) = \frac{\Gamma(\alpha) \Gamma(\beta)}{\Gamma(\alpha+\beta)}\) is the normalization constant (if this looks scary don't worry about it, it is just there to make sure everything sums to \(1\) and to scare children at Halloween). Note that here we only need a distribution over a single binary random variable \(\theta\). (The multivariate generalization of the Beta distribution is the Dirichlet distribution.)

Why is the Beta distribution a good fit?

So far, we have a distribution over \(\theta\). How can we get an estimate for \(\theta\)?

A few comments:

Note that MAP is only one way to get an estimator. There is much more information in \(P(\theta \mid D)\), and it seems like a shame to simply compute the mode and throw away all other information. A true Bayesian approach is to use the posterior predictive distribution directly to make prediction about the label \(Y\) of a test sample with features \(X\): $$ P(Y\mid D,X) = \int_{\theta}P(Y,\theta \mid D,X) d\theta = \int_{\theta} P(Y \mid \theta, D,X) P(\theta | D) d\theta $$ Unfortunately, the above is generally intractable in closed form and sampling techniques, such as Monte Carlo approximations, are used to approximate the distribution. A pleasant exception are Gaussian Processes, which we will cover later in this course.

Another exception is actually our coin toss example. To make predictions using \(\theta\) in our coin tossing example, we can use \begin{align} P(heads \mid D) =& \int_{\theta} P(heads, \theta \mid D) d\theta\\ =& \int_{\theta} P(heads \mid \theta, D) P(\theta \mid D) d\theta \ \ \ \ \ \ \textrm{(Chain rule: \(P(A,B|C)=P(A|B,C)P(B|C)\).)}\\ =& \int_{\theta} \theta P(\theta \mid D) d\theta\\ =&E\left[\theta|D\right]\\ =&\frac{n_H + \alpha}{n_H + \alpha + n_T + \beta} \end{align} Here, we used the fact that we defined \(P(heads \mid D, \theta)= P(heads \mid \theta)=\theta \) (this is only the case because we assumed that our data is drawn from a binomial distribution - in general this would not hold).

As always the differences are subtle. In MLE we maximize \(\log\left[P(D;\theta)\right]\) in MAP we maximize \(\log\left[P(D|\theta)\right]+\log\left[P(\theta)\right]\). So essentially in MAP we only add the term \(\log\left[P(\theta)\right]\) to our optimization. This term is independent of the data and penalizes if the parameters, \(\theta\) deviate too much from what we believe is reasonable. We will later revisit this as a form of regularization, where \(\log\left[P(\theta)\right]\) will be interpreted as a measure of classifier complexity.