Overall Architecture and Design Decisions

Computing the Values in the Harris Matrix

In order to do this, we needed to find Ix and Iy at every point. We did this by convolving a 5x5 Gaussian with x and y Sobel filters. We then computed the Harris values by multiplying these derivatives together and accumulating the products over the window. The mask produced from our Harris values matched the mask from the solution code exactly, which confirmed that this step was correct.
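The steps above can be sketched in Python with NumPy. This is a minimal sketch under assumptions, not our project code. It computes Ix and Iy with 3x3 Sobel filters and weights the products Ix², IxIy and Iy² with a 5x5 Gaussian window. The window's sigma of 0.5 is an assumed value. Each pixel is scored with det(H) - alpha * trace(H)^2, where alpha = 0.1 is also an assumption. The helper names `filter2d`, `gaussian_kernel` and `harris_values` are hypothetical.

```python
import numpy as np

# Sobel kernels for cross-correlation: SOBEL_X responds to I(x+1) - I(x-1).
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T


def filter2d(image, kernel):
    """Same-size 2D cross-correlation with edge padding (odd-sized kernels)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(np.asarray(image, dtype=float), ((ph, ph), (pw, pw)), mode="edge")
    h, w = image.shape
    out = np.zeros((h, w))
    for dy in range(kh):
        for dx in range(kw):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def gaussian_kernel(size=5, sigma=0.5):
    """Normalized size x size Gaussian window (sigma is an assumed value)."""
    ax = np.arange(size) - size // 2
    g = np.exp(-(ax ** 2) / (2 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()


def harris_values(image, alpha=0.1):
    """Return the Harris score and the Harris matrix entries A, B, C per pixel."""
    ix = filter2d(image, SOBEL_X)   # derivative along columns (x)
    iy = filter2d(image, SOBEL_Y)   # derivative along rows (y)
    window = gaussian_kernel()
    a = filter2d(ix * ix, window)   # A: Gaussian-weighted sum of Ix^2
    b = filter2d(ix * iy, window)   # B: Gaussian-weighted sum of Ix * Iy
    c = filter2d(iy * iy, window)   # C: Gaussian-weighted sum of Iy^2
    score = (a * c - b * b) - alpha * (a + c) ** 2
    return score, a, b, c
```

The `score` array has the same shape as the input image and plays the role of the Harris mask. The A, B and C arrays are returned as well because the orientation step needs them.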

Here are the Harris masks produced by the first Graffiti and second Yosemite images, respectively:

For comparison, here are the two corresponding original images:

These Harris values match intuition. The mask for the mountain contains many softer intensities throughout, which is consistent with the jagged edges and corners that cover the mountain face.

In the Graffiti image, the insignia below the chicken forms an intensity hotspot, which is consistent with the number of corners on its letters and numbers.

Computing the Local Maximum and Thresholding
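The thresholding and 5x5 local-maximum test described in this section can be sketched in Python with NumPy. This is a minimal sketch under assumptions. It expects the Harris scores as a 2D array. `select_features` is a hypothetical name, and treating ties within the window as maxima is our own choice.

```python
import numpy as np


def select_features(harris, window=5):
    """Return (row, col) points whose score exceeds mean + 1 std and is the
    maximum of its window x window neighborhood."""
    threshold = harris.mean() + harris.std()
    r = window // 2
    # Pad with -inf so out-of-image pixels never win the local-max test.
    padded = np.pad(np.asarray(harris, dtype=float), r, mode="constant",
                    constant_values=-np.inf)
    h, w = harris.shape
    local_max = np.full((h, w), -np.inf)
    for dy in range(window):
        for dx in range(window):
            local_max = np.maximum(local_max, padded[dy:dy + h, dx:dx + w])
    keep = (harris >= local_max) & (harris > threshold)
    return np.argwhere(keep)
```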

We calculated the mean and standard deviation of the Harris values over the image and set the threshold at one standard deviation above the mean. We also kept only points that are local maxima within a 5x5 window, which further filters the features.

Computing the Characteristic Orientation
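The orientation computation described in this section can be sketched in plain Python. This is a sketch under assumptions, not our exact code. `characteristic_angle` is a hypothetical name. A, B and C are the Harris matrix entries at the feature, and `ix` is the x derivative at that point. The special case B = 0, where the matrix is diagonal, is our own addition.

```python
import math


def characteristic_angle(a, b, c, ix):
    """Angle in radians of the principal eigenvector of [[a, b], [b, c]].

    a, b, c are the Harris matrix entries and ix is the x derivative at the
    feature point (hypothetical interface).
    """
    # det([[a - lam, b], [b, c - lam]]) = 0 is the quadratic
    # lam^2 - (a + c) lam + (a c - b^2) = 0; take the larger root.
    lam = ((a + c) + math.sqrt((a - c) ** 2 + 4 * b * b)) / 2
    if b != 0:
        # First row of [[a, b], [b, c]] [x, y] = lam [x, y]:
        # a x + b y = lam x, so with x = 1, y = (lam - a) / b.
        x, y = 1.0, (lam - a) / b
        angle = math.atan(y / x)   # always in (-pi/2, pi/2)
    elif a >= c:
        angle = 0.0                # diagonal matrix, eigenvector (1, 0)
    else:
        angle = math.pi / 2        # diagonal matrix, eigenvector (0, 1)
    # The eigenvector's sign is arbitrary, so use the sign of the x derivative
    # to choose between angle and angle + pi.
    if ix < 0:
        angle += math.pi
    return angle
```

Solving for y in terms of x with x = 1 is enough because an eigenvector is only defined up to scale. The final `ix < 0` check is the fix for the pi ambiguity.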

To calculate the characteristic orientation of a feature, we used the direction, in radians, of the principal eigenvector of the Harris matrix [A, B; B, C]. First, we set the determinant of the matrix [A-lambda, B; B, C-lambda] to zero. This gives a quadratic equation in lambda, which we solve, taking the larger root. Then, to find the principal eigenvector, we solve [A, B; B, C][x, y] = lambda[x, y] for x and y. (We did not actually solve the full system. Instead we found y in terms of x, which is a slight modification that suffices because an eigenvector is only defined up to scale.) As a sanity check, we substituted the values back in for x and y and confirmed that the equation was satisfied. Finally, to get the angle, we took the arctangent of y divided by x. This gave us the direction of the principal eigenvector, which we validated by inspection.

One issue arose here that we had to resolve. The arctangent of y/x returns a value in the range (-pi/2, pi/2). An eigenvector is also only determined up to sign. As a result, two equivalent features could be assigned angles that differ by pi. To solve this, we add pi to the value returned by the arctangent whenever the x derivative of the intensity at the point is negative. This makes our angles consistent across different features.

Computing the MOPS descriptors
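The descriptor construction described in this section can be sketched with NumPy. This is a sketch under stated assumptions. It composes the translation, the rotation by minus the characteristic angle, the scaling and the re-centering into one 3x3 matrix. It assumes a 40x40 sampling window shrunk to 8x8, although the text only says the result is 8x8. A small bilinear sampler stands in for WarpGlobal, whose interface is not reproduced here. All function names are hypothetical.

```python
import numpy as np


def mops_transform(x, y, angle, window=40, patch=8):
    """3x3 matrix taking image coordinates to patch coordinates."""
    to_origin = np.array([[1.0, 0.0, -x], [0.0, 1.0, -y], [0.0, 0.0, 1.0]])
    c, s = np.cos(angle), np.sin(angle)
    # Rotation by -angle: maps the orientation (cos, sin) onto the +x axis.
    rotate = np.array([[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]])
    k = patch / window  # assumed 40x40 window shrunk to 8x8
    scale = np.array([[k, 0.0, 0.0], [0.0, k, 0.0], [0.0, 0.0, 1.0]])
    center = (patch - 1) / 2
    # Offset so the feature lands in the middle of the patch canvas.
    to_patch = np.array([[1.0, 0.0, center], [0.0, 1.0, center], [0.0, 0.0, 1.0]])
    return to_patch @ scale @ rotate @ to_origin


def bilinear(image, px, py):
    """Bilinear sampling at float coordinates (px = column, py = row), clamped."""
    h, w = image.shape
    px = np.clip(px, 0, w - 1)
    py = np.clip(py, 0, h - 1)
    x0 = np.floor(px).astype(int)
    y0 = np.floor(py).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    fx, fy = px - x0, py - y0
    top = image[y0, x0] * (1 - fx) + image[y0, x1] * fx
    bottom = image[y1, x0] * (1 - fx) + image[y1, x1] * fx
    return top * (1 - fy) + bottom * fy


def mops_patch(image, x, y, angle, window=40, patch=8):
    """Sample a patch x patch descriptor by inverse-mapping each patch pixel."""
    inverse = np.linalg.inv(mops_transform(x, y, angle, window, patch))
    us, vs = np.meshgrid(np.arange(patch), np.arange(patch))  # column, row
    coords = np.stack([us.ravel(), vs.ravel(), np.ones(patch * patch)])
    src = inverse @ coords
    values = bilinear(np.asarray(image, dtype=float), src[0] / src[2], src[1] / src[2])
    return values.reshape(patch, patch)
```

Mapping each output pixel back through the inverse matrix is the usual way to warp an image, because every patch pixel then receives exactly one interpolated value.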

We applied a homography that translated the image so the feature point was at the origin, rotated it clockwise by the characteristic angle (so that the orientation lies on the positive x-axis), scaled it to 8x8, and then offset it so that it was back on the canvas. We used WarpGlobal to interpolate the pixel values under this transform.

Strengths and Weaknesses

The strengths of our program are the correct calculation of the Harris values, the computation of the descriptors, and the invariance of the features to illumination changes. The Harris mask produced by our program matches the one produced by the solution code, and the detected features are distinctive points in the image. We also appear to compute the homographies correctly. We make the descriptors invariant to illumination changes by taking the 8x8 patch, subtracting the mean from each pixel, and dividing by the standard deviation.
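The normalization step can be sketched as follows. This is a minimal sketch. `normalize_patch` is a hypothetical name. Returning all zeros for a flat patch with zero standard deviation is our assumption, since the division is otherwise undefined. Consider any intensity change of the form I' = g * I + o with g > 0. It shifts and scales the patch mean and standard deviation in exactly the same way, so the normalized descriptor stays the same.

```python
import numpy as np


def normalize_patch(patch, eps=1e-8):
    """Flatten an 8x8 patch and give it zero mean and unit standard deviation."""
    flat = np.asarray(patch, dtype=float).ravel()
    std = flat.std()
    if std < eps:
        # Flat patch: no contrast to normalize (assumed handling).
        return np.zeros_like(flat)
    return (flat - flat.mean()) / std
```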

The biggest weakness is the canonical orientation. Currently we use the angle of the principal eigenvector (the one for the largest eigenvalue), with some additional logic to determine its direction. The MOPS paper uses a different approach. It first blurs the image and then uses Ix and Iy as the components of the vector from which the angle is derived.
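For comparison, the blurred-gradient orientation mentioned above can be sketched as follows. This is a minimal sketch under assumptions, not a reproduction of the paper's code. The blur width `sigma` is an assumed parameter. The gradient is taken with `np.gradient`, and `blurred_gradient_angle` is a hypothetical name. Unlike the eigenvector approach, `arctan2` of the smoothed gradient returns an angle over the full circle directly, so no separate sign fix is needed.

```python
import numpy as np


def gaussian_blur(image, sigma):
    """Separable Gaussian blur with edge padding."""
    r = int(np.ceil(3 * sigma))
    ax = np.arange(-r, r + 1)
    g = np.exp(-(ax ** 2) / (2 * sigma ** 2))
    g /= g.sum()
    padded = np.pad(np.asarray(image, dtype=float), r, mode="edge")

    def blur_1d(v):
        # 'valid' drops the padding again, restoring the original length.
        return np.convolve(v, g, mode="valid")

    rows = np.apply_along_axis(blur_1d, 1, padded)   # blur along x
    return np.apply_along_axis(blur_1d, 0, rows)     # blur along y


def blurred_gradient_angle(image, x, y, sigma=4.5):
    """Orientation from the smoothed gradient (Ix, Iy) at column x, row y."""
    blurred = gaussian_blur(image, sigma)
    iy, ix = np.gradient(blurred)   # axis 0 is rows (y), axis 1 is columns (x)
    return float(np.arctan2(iy[y, x], ix[y, x]))
```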

Another major weakness is that our features are not scale invariant. This is expected, because we did not have time to implement the extra credit that would have added scale invariance.

Results

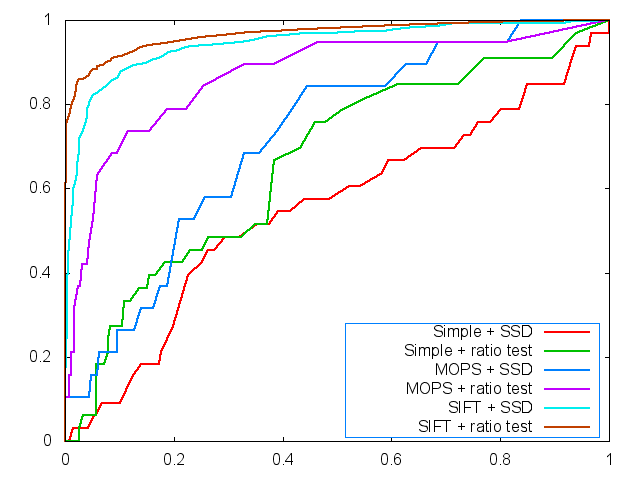

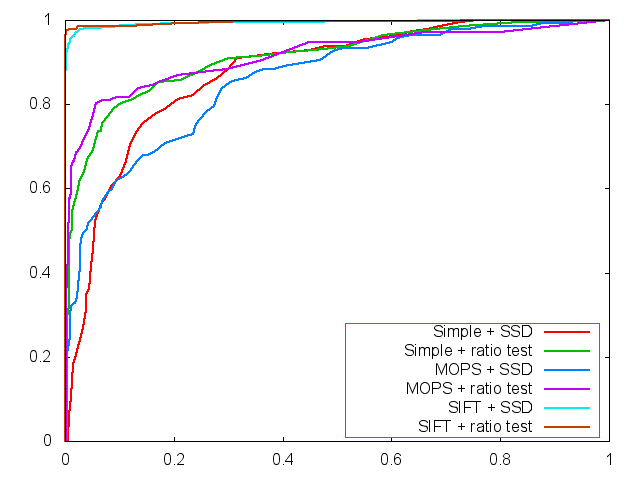

As predicted, both feature matchings performed best with the combination of Harris features, MOPS descriptors (ignoring SIFT for now), and the ratio test for matching. Consider the following charts:

Graffiti images 1 and 2

Yosemite images 1 and 2

Furthermore, here are the average AUCs for the different benchmarks:

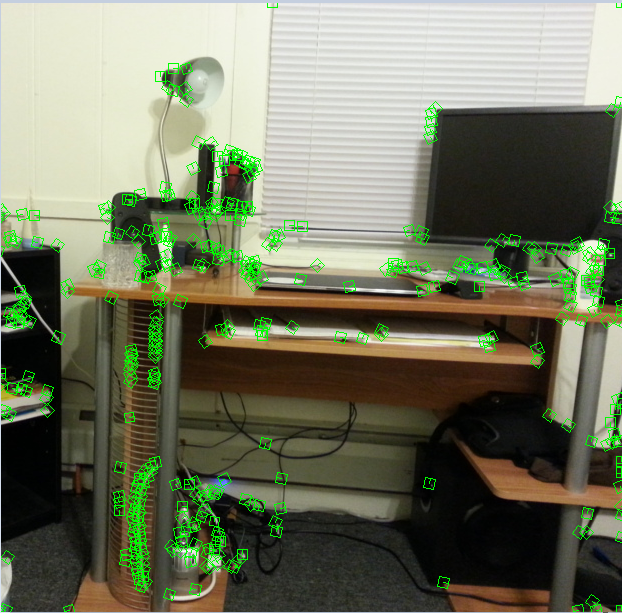

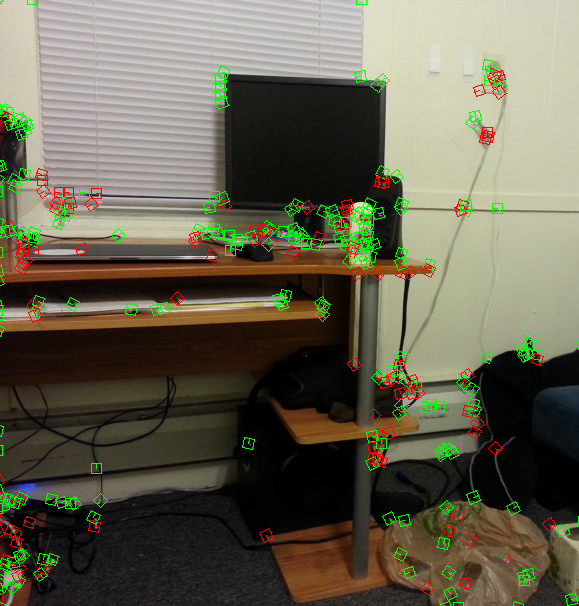

My own experiment

I took two photos of my desk from slightly different angles and positions. The top represents the original query photo with all features selected, while the bottom shows the matched features.

As you can see below, there are quite a lot of matches between the two. There are also a lot of false positives, such as all the matches to the right of the desk.

However, this must be taken with a grain of salt, since a ratio test would probably eliminate a lot of these errors.

| Image Directory | Descriptor Type | Distance | Average AUC |
| --- | --- | --- | --- |
| Bikes | Simple | SSD | 0.4212 |
| Bikes | Simple | Ratio | 0.511 |
| Bikes | MOPS | SSD | 0.591 |
| Bikes | MOPS | Ratio | 0.634 |
| Wall | Simple | SSD | 0.408 |
| Wall | Simple | Ratio | 0.549 |
| Wall | MOPS | SSD | 0.591 |
| Wall | MOPS | Ratio | 0.616 |
| Leuven | Simple | SSD | 0.246 |
| Leuven | Simple | Ratio | 0.532 |
| Leuven | MOPS | SSD | 0.591 |
| Leuven | MOPS | Ratio | 0.639 |
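The two distance types compared in the table can be sketched in Python with NumPy. This is a minimal sketch under assumptions. `match_features` is a hypothetical name, descriptors are the rows of 2D arrays, and `desc2` needs at least two rows for the ratio test. Here the ratio is taken as the SSD to the best candidate divided by the SSD to the second-best candidate, which is an assumption about the exact definition. In both modes a lower score means a better match. Ambiguous matches, where two candidates are nearly equally close, get ratios close to 1.

```python
import numpy as np


def match_features(desc1, desc2, use_ratio=True):
    """For each row of desc1, return the index of its best match in desc2
    and a score where lower is better."""
    # Pairwise sum of squared differences, shape (len(desc1), len(desc2)).
    ssd = ((desc1[:, None, :] - desc2[None, :, :]) ** 2).sum(axis=2)
    order = np.argsort(ssd, axis=1)
    rows = np.arange(len(desc1))
    best = order[:, 0]
    best_ssd = ssd[rows, best]
    if not use_ratio:
        return best, best_ssd
    second_ssd = ssd[rows, order[:, 1]]
    # Guard against division by zero when the second-best SSD is 0.
    return best, best_ssd / np.maximum(second_ssd, 1e-12)
```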